Defense Against the (Cyber) Dark Arts - Managing LLMs as if They Were AI House-Elves

Evaluate your spending

Imperdiet faucibus ornare quis mus lorem a amet. Pulvinar diam lacinia diam semper ac dignissim tellus dolor purus in nibh pellentesque. Nisl luctus amet in ut ultricies orci faucibus sed euismod suspendisse cum eu massa. Facilisis suspendisse at morbi ut faucibus eget lacus quam nulla vel vestibulum sit vehicula. Nisi nullam sit viverra vitae. Sed consequat semper leo enim nunc.

- Lorem ipsum dolor sit amet consectetur lacus scelerisque sem arcu

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic elementum purus

- Eget at suscipit et diam cum. Mi egestas curabitur diam elit

Lower energy costs

Lacus sit dui posuere bibendum aliquet tempus. Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget. Quisque scelerisque sit elit iaculis a.

Have a plan for retirement

Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget.

Plan vacations and meals ahead of time

Massa dui enim fermentum nunc purus viverra suspendisse risus tincidunt pulvinar a aliquam pharetra habitasse ullamcorper sed et egestas imperdiet nisi ultrices eget id. Mi non sed dictumst elementum varius lacus scelerisque et pellentesque at enim et leo. Tortor etiam amet tellus aliquet nunc eros ultrices nunc a ipsum orci integer ipsum a mus. Orci est tellus diam nec faucibus. Sociis pellentesque velit eget convallis pretium morbi vel.

- Lorem ipsum dolor sit amet consectetur vel mi porttitor elementum

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic interdum id risus laoreet

- Amet blandit at sit id malesuada ut arcu molestie morbi

Sign up for reward programs

Eget aliquam vivamus congue nam quam dui in. Condimentum proin eu urna eget pellentesque tortor. Gravida pellentesque dignissim nisi mollis magna venenatis adipiscing natoque urna tincidunt eleifend id. Sociis arcu viverra velit ut quam libero ultricies facilisis duis. Montes suscipit ut suscipit quam erat nunc mauris nunc enim. Vel et morbi ornare ullamcorper imperdiet.

In my prior post, I shared the details of what happened when I successfully executed a malicious injection attack in ChatGPT. Today, I’d like to take a step back and share some thoughts about how you can balance the promise of LLMs with the stark reality of the gaping security holes they introduce to your environment.

I've noticed (and you probably have too) that the wider media is treating LLMs and ChatGPT as if they are almost magical, and it got me thinking about the importance of demystifying some of the nuts and bolts behind the magic so that we can have a more pragmatic discussion about how to harness it safely. So, what better way to start than with a magical analogy right out of Harry Potter?

Why LLMs Are So Alluring

Before we board the train to Hogwarts, I will set the stage.

Large language models – aka LLMs – are taking the world by storm, and suddenly everything that we know about how to protect our applications seems to have gone out the window. How did this happen overnight?

While the research into Generative AI was born long before the hype of today, I have to admit that there is a certain thrill to treating the same browser that I use every day as a new interactive AI helper. OpenAI and ChatGPT have brought that generational leap in AI technology to the mainstream. But beyond the ways that people are using it today in the public domain, my main intrigue about LLMs is how to get the benefits without opening myself up to the scary risks of depending on a probabilistic black-box that hasn’t quite been perfected yet.

Some of the main use cases that have emerged are search, querying all kinds of data in natural language vs SQL, automatic summarization of meetings, and dare I say - taking action beyond just the reading and summarization of tasks! All of these use cases fit a pattern: we have lots and lots of data in the enterprise, and the traditional ways of deriving value from it by, say, spending hours of time analyzing the data in Excel spreadsheets or actioning that data through cumbersome tools isn’t working anymore. Wouldn’t it be nice to have a helpful AI chatbot and assistant that we can talk to in natural language that can then go and make sense of all the data we have in our work lives and then ask it to actually perform certain tasks based on its super-human, almost “magical” comprehension of that data?

The Idealistic Vision For “Melbourne,” My Very Own AI House-Elf

Being an engineer building large scale cloud deployments and applications and knowing how painful it is to manage them, I’m intrigued by the idea of throwing all the operational data from Kubernetes, application logs, infrastructure logs into an LLM and basically treating it like my own personal house-elf.

Since Dobby is resting in peace, I would perhaps call my new LLM Melbourne ;), build a query interface on top of it, and declare “Talk to your data!” - and then watch as Melbourne fixes all of my operational headaches with perfectly accurate and coherent answers when I ask it things like “which buckets in my AWS account are public and who is accessing them in the last hour?” Or “which of my applications has a high error rate with a specific error code?”

Beyond querying, I would like Melbourne to actually help me take action in my cloud environments so I don’t have to learn Terraform or the dark magic that is Cloud Formation. Melbourne should be good at that, since it was born as a creature of the same dark magic itself. Knowing how S3 bucket misconfigurations happen all the time and how risky they are, I could ask Melbourne to create a rule that alerts me whenever a bucket with a public access policy is created, and then I could ask it to change the setting to private. Imagine applying this workflow across all of my cloud environments at scale - something very easy for an AI house-elf to do with the touch of its virtual finger! Sounds like a nifty solution to all my complex problems!

But how safe is it really to let loose an AI house-elf in my cloud environment? Remember all those instances when Dobby didn’t exactly do what Harry Potter wanted him to do? Remember how his subtle misinterpretations of Harry’s seemingly clear commands ended in chaos over and over again? Would you want Dobby in charge of your cloud-native security without any insight into what exactly it is he’s doing for you with his best-intentioned magic?

The Pitfalls of LLM Application Architecture and What They Mean for Security

While LLMs are capable of accomplishing many great things, I am not jumping up and down yet to build this magical cloud superpower “house-elf” inspired bot. I’m actually quite alarmed by the rush to deploy LLM-based applications without paying attention to the very new, dangerous, constantly evolving, and yet poorly understood security risks involved in deploying them. So while the space of LLM-based apps is still the wild wild west, I’ve dug my heels in with the skeptical eye borne of many years at the intersection of ML and security to unpack what is involved in the newest state-of-the-art approaches when building these new classes of applications.

As a distributed systems engineer building security apps, I’m always deeply interested in the interfaces that connect different systems, what is transferred between them, and how those interfaces can trust each other to do the right thing. Basically, I’ve been studying the “magic” behind ML and AI for a very long time, so I have a particular interest in trying to understand the system interfaces into and out of LLMs within these applications to then understand where the security gaps are and where they might emerge imminently as technology continues to leap forward at an almost magical speed.

At their most basic function, LLMs work by trying to predict the next word in a sentence that is relevant to the current context in the question or task at hand. Seems straightforward, but remember how often Dobby conveniently misinterpreted context or requests to favor his own plan? He had no malice, just as Melbourne wouldn’t, and yet, what he did with those commands was not what he was actually supposed to do. Let’s dig a little bit deeper into the technology to understand how Dobby’s innocent misinterpretations could be a harbinger of Melbourne’s helpful tasks also going awry.

The Devil’s In The Details - Context in the Case of LLMs

Context is a multi-dimensional concept within LLMs that helps add more relevance to the model’s output. I think of it as supplying direction to the LLM in terms of either additional content and/or additional prompts so that the capability of the LLM to predict the next word in a sentence or an action becomes more specific to the desired outcome. An example illustrating this could be an LLM based on a foundation model such as GPT-4, but tuned with additional data and context specific to a domain (such as the cloud data in the previous example) to help it customize its answers to the cloud domain. This additional context may be made available to the LLMs through embeddings representing external content in a vector database, or via APIs that the LLM can invoke.

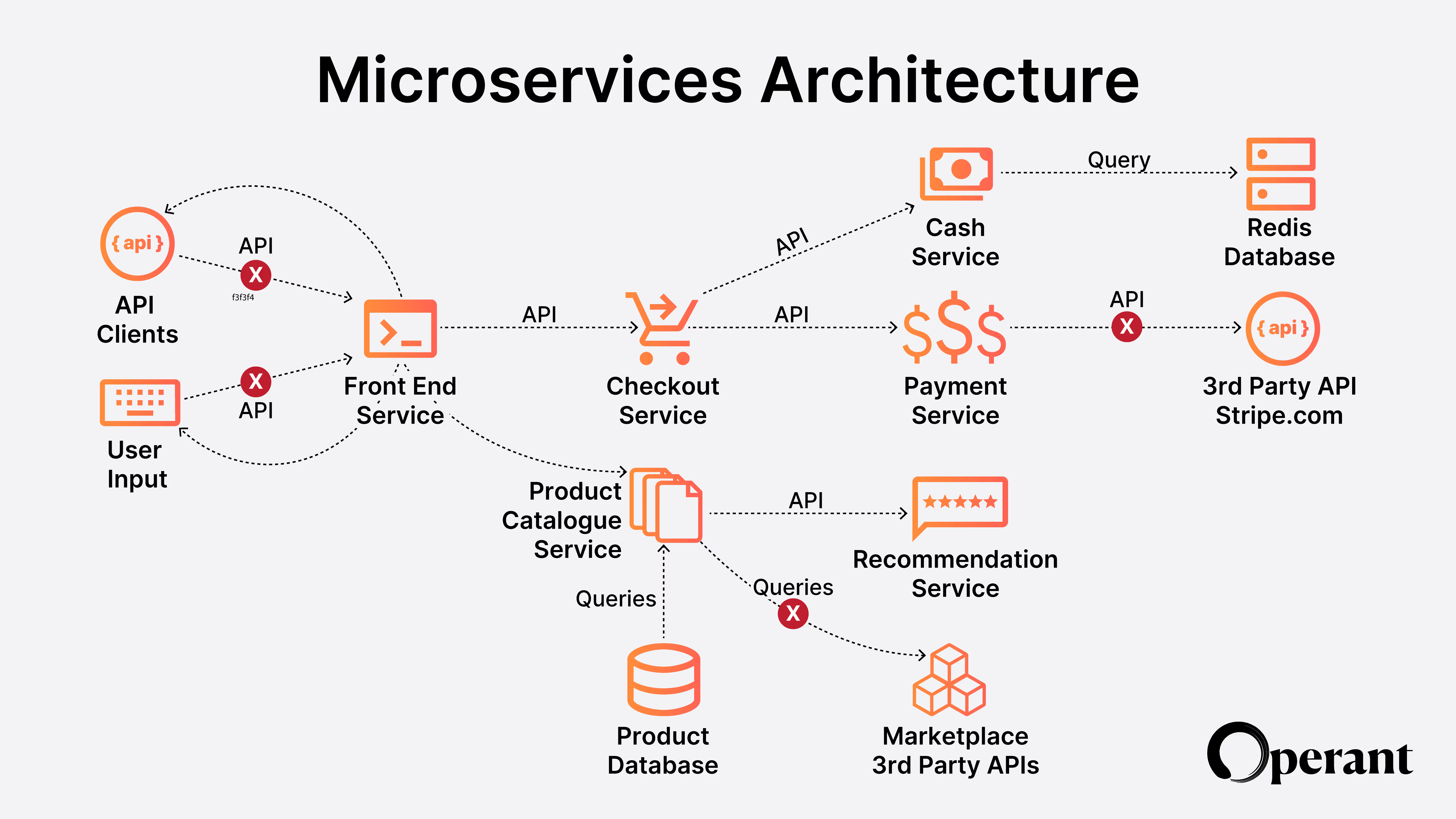

Usually on the output side of the LLM, they output text as natural language which could be answering the question, or as structured output following a format as instructed by the input command. But an LLM is not isolated. For LLMs to be useful beyond search, they need to take action, which has led to a wide array of integrations or plugins that can connect with LLMs and take instruction from its output to go and perform a task. This pattern of text input -> LLM -> text/action output can be chained together repeatedly to create workflows that process and manipulate data in different ways and take action.

If this is starting to look like chained microservices that communicate with each other through APIs, using both internal and 3rd party APIs, while connecting to data systems of different kinds, it sort of is that same pattern but with more unstructured text or numerical representations of text (embeddings) being transferred back and forth compared to the somewhat structured nature of APIs and, say, SQL queries. Makes one wonder if the LLM pattern would end up replacing APIs and SQL at some point in the future?

In my prior post I proposed a surprising parallel - LLMs as the new APIs. If LLMs were to replace APIs and SQL, it is highly likely that LLM-based applications will inherit the vulnerabilities found within APIs and data queries and make them ever more dangerous and difficult to control. A case in point proven by how easy it was for me to succeed at a malicious injection attack. It is no coincidence that OWASP has come out with updated guidance by listing their new top 10 attack vectors for LLMs, threats that go beyond the APIs of today.

Stepping Up the Game For A More Secure Present and Future

How does one go about solving some of these security challenges while still reaping the benefits of LLM capabilities? Despite his bumbling, everyone would still love to have a Dobby in their life, so how do we move forward without opening ourselves up to more attacks?

In the third installation of this series, I’ll cover how to map known security defenses to new apps, how to create a new chain of trust when linking LLMs to plugins, and how to bring new forms of runtime protection to secure against the new attack vectors raised by LLMs.

Meanwhile, if you'd like to discuss how Operant can help secure your AI house-elves, let's chat: info@operant.ai

Stay tuned!

3%20%3D(Art)Kubed%20(16%20x%209%20in)%20(7)-p-1080.avif)

.png)