Microservices and Machine Learning: The Yin and Yang of Modern Applications

On a recent rainy Saturday, I rewatched the 2011 Woody Allen movie Midnight in Paris. I’d forgotten how this odd romantic dramedy explores what it would feel like to dip into parallel universes, especially ones where you could experience and influence the works of some of the brightest minds in history, knowing how those works would contribute to cultural disruptions for years to come.

Today, as software engineering stands on the precipice of the next wave in modern applications, I have been thinking a lot about the foundations upon which this next phase will be built. I realized that two parallel universes, evolving over the last couple of decades, have brought us here.

They are just on the edge of fully converging.

Machine Learning needs Microservices

Incidentally, starting in 2011, Stanford instructors like Andrew Ng, Peter Norvig and Sebastian Thrun launched the massive open online course (MOOC) movement, which has since changed the lives of software engineers, mathematicians and philosophers alike. Their courses turned the spotlight on neural networks, deep learning and data systems with a level of simplicity and scale that had not been achieved in the previous five decades of Artificial Intelligence research.

Between the brilliance of new people learning these concepts and the power of cloud computing, we saw massive breakthroughs in Machine Learning model accuracy, robustness and applications across many markets and use cases. It quickly became clear that modern applications would undergo a massive transition from being primarily logic-driven (from the days of flowcharts and code cards) to being data-driven. Now, in 2022, Machine Learning has become a major pillar for all applications.

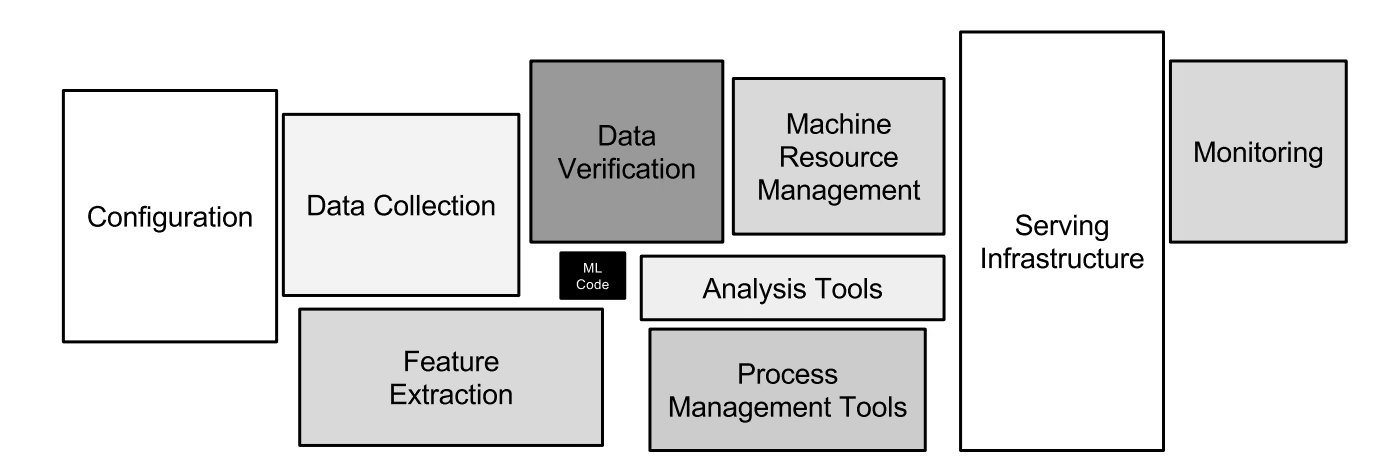

While ML models and algorithms were seeing massive breakthroughs in the “research labs,” moving these models from training to production faced many complexities (check out the hidden technical debt of ML systems for a good take on what it takes to productize Machine Learning). In the early days, engineers had to move workloads through different pipelines and rewrite code from scratch, which pushed many companies on the ML frontier to work together on various standards. Can you imagine the frustration of reworking complex algorithms and their underlying data pipelines? Back when I was heading up ML at Arm, we helped define ONNX, one such standard, which enabled ML model deployment across millions of devices. In many ways, it was planting the seeds of ML across the Edge - seeds that now enable a wide range of functionality on phones, industrial systems, and autonomous vehicles, all systems that users depend on every day.

Over the years, many techniques and projects have emerged to support model serialization and serving, and one of the most powerful and preferred approaches is Microservices. Engineering teams can now work together to move Machine Learning models into production by running native Microservices and API endpoints that support inferencing, versioning, auto-scaling, and security.
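To make the pairing concrete, here is a minimal sketch of what a versioned inference endpoint could look like, using only the Python standard library. Everything in it is an assumption for illustration: the `MODELS` table, the `predict` function, the `/predict` route and the toy linear "models" are hypothetical stand-ins, not the API of any particular serving framework. A real service would load serialized model artifacts and add the auto-scaling and security layers mentioned above.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical model store: each version is a toy linear scorer.
# A production service would load serialized artifacts instead.
MODELS = {
    "v1": {"weights": [0.5, -0.25], "bias": 0.0},
    "v2": {"weights": [0.4, -0.1], "bias": 0.1},
}
DEFAULT_VERSION = "v2"


def predict(features, version=DEFAULT_VERSION):
    """Score one feature vector with the requested model version."""
    if version not in MODELS:
        raise KeyError(f"unknown model version: {version}")
    model = MODELS[version]
    if len(features) != len(model["weights"]):
        raise ValueError("feature vector has the wrong length")
    score = sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]
    return {"version": version, "score": score}


class InferenceHandler(BaseHTTPRequestHandler):
    """Handles POST /predict with JSON like {"features": [2.0, 4.0], "version": "v1"}."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            result = predict(payload["features"], payload.get("version", DEFAULT_VERSION))
        except (KeyError, ValueError, TypeError) as exc:
            # Malformed JSON, a missing field, or an unknown version: client error.
            self.send_error(400, str(exc))
            return
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Start the service on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), InferenceHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally. Because the version travels with each request, an old and a new model can run side by side while traffic shifts gradually from one to the other, which is the kind of experimentation that a Microservices-based design makes practical.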

Among the customers we have been talking to, this pairing of ML and Microservices is growing fast. As recently as 2015, ML innovation thrived largely inside giant companies like Apple, Google and Netflix. But now ML is everywhere, even within operational companies where ML and infrastructure skills are not native to the core business. Now, Machine Learning needs Microservices more than ever.

Microservices need Machine Learning

Shifting into a different parallel universe in the 2010s, the world of operating systems and distributed systems was seeing innovations of a different kind. With Linux distributions picking up open innovations in operating system-level virtualization, the world of virtual machines and cloud applications was about to be disrupted.

Growing support for kernel cgroups and isolated namespaces allowed multiple isolated applications to run without starting new virtual machines. These new concepts of containers and Microservices-based design patterns accelerated other cultural changes within software teams. Many forward-thinking teams used the newfound agility to build modular systems, architected applications for efficient resource utilization, and started experimenting with new versions of services and APIs, which previously wasn’t possible without affecting the entire monolithic application. With this growing march towards Microservices and containers, rethinking the entire software stack from the core became inevitable.

From a bystander’s viewpoint, this disruption is hard to comprehend. While we might need a different blog post to break apart the myths, the top three we come across are:

- Microservices adoption is negligible, outside of the “mega companies”

- Older tools can do everything, since they have been supporting ALL applications

- With so many open source projects for Microservices, there’s nothing more needed in the ecosystem

The truth is, what we are seeing within customer stacks and engineering teams has been an eye-opening experience.

We are not talking about “mega companies” like Uber or Citibank or T-Mobile, where large engineering teams are dedicated to tackling a scale that isn’t commonly seen. Engineering teams across all industries, including High Tech, FinTech and banking, E-Commerce and online marketplaces, are in the midst of multi-quarter Microservices migration projects. Whether these businesses are building to be API-first, Machine Learning-driven, or IoT-powered, it is very clear that modern applications are built with Microservices.

And the scale within these green-field adopters is mind-blowing. As an example, one of our unicorn customers runs hundreds of clusters with 200+ services each, releasing new APIs and services daily whenever possible. With the scale and volume of data being generated by these systems, engineering teams are struggling to keep up with daily or hourly changes in their highly dynamic environments - often stuck in a reactive loop, applying fixes after breakages happen.

Human response cannot tackle the scale and complexity of such systems. And in a roundabout way, Microservices now need Machine Learning.
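As a rough illustration of handing that watchfulness to software, the sketch below flags unusual values in a stream of service metrics using a rolling z-score. It is a deliberately simple statistical stand-in for the learned models a real system would use, and the `RollingAnomalyDetector` class, its parameters and the sample latencies are all hypothetical, chosen only to show the shape of the idea.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags values that sit far from the recent rolling mean (a z-score test)."""

    def __init__(self, window=30, threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)  # recent "normal" observations
        self.threshold = threshold           # z-score above which we flag
        self.min_samples = min_samples       # warm-up before flagging anything

    def observe(self, value):
        """Return True if `value` is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0:
                is_anomaly = abs(value - mu) / sigma > self.threshold
            else:
                # A perfectly flat baseline: any deviation at all is unusual.
                is_anomaly = value != mu
        # Learn only from normal points so a spike does not inflate the baseline.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


# Hypothetical per-minute latencies (ms) for one service, with a single spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 450, 101]
detector = RollingAnomalyDetector()
flags = [detector.observe(x) for x in latencies_ms]
print(flags)
```

Only the 450 ms reading is flagged here. Across hundreds of clusters and services, one such detector per metric can watch continuously and surface the handful of signals worth a human's attention before a breakage spreads.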

In short, Microservices made Machine Learning mainstream, and now Machine Learning is needed to make Microservices mainstream.

As enterprises cross the chasm towards Microservices and Machine Learning, the Yin and Yang of modern applications, the convergence of these technologies will cause a paradigm shift far greater than the geniuses of The Lost Generation in 1920s Paris (or Woody Allen) could ever imagine. I am excited to see what the rest of the 2020s has to offer.
