Not Quite Thomas Crown - What Cybersecurity Teams Can Learn From the Recent British Museum Thefts

This week, security has been at the top of the news - museum security, that is. After an embarrassing series of incidents in which an employee of the British Museum managed to “exfiltrate” precious artifacts from the museum’s collections over “a long period of time,” many people have started asking a question that has rarely faced such scrutiny before: How secure are museums, really?

Museums are often assumed to be some of the most secure spaces on earth - just look at the lengths Thomas Crown went to in order to steal that Monet! When people picture museum security, they often imagine something out of a ’90s movie: security guards in every gallery, imposing metal gates, CCTV, bullet-proof display cases, Entrapment-style lasers, and multiple locks on the doors and cabinets where objects wait in storage. Yet in the currently unfolding drama, authorities have identified over 1,500 historical objects missing from the British Museum alone, and more are being identified as the museum starts surveying the damage in earnest. As it turned out, all of those physical barriers weren’t enough to stop one over-permissioned employee who wasn’t being watched very closely.

To anyone working in cybersecurity, this story sounds strangely familiar. An employee who sat quietly with too much access and not enough scruples stole precious data over a long period of time while no one was looking. Once the breach is discovered, it’s hard even to determine the scope of the loss, because all of that precious data was never properly surveyed and cataloged to begin with. It is a painful cycle of theft and damage control that plays out somewhere every day, in the art world and the cyber world alike, and it is becoming more dangerous than ever as the playing field evolves faster than teams and resources can keep up.

While the British Museum’s effort to unearth the full scope of its breach will have to be painstakingly manual, the agony of such an excruciating wait is strikingly similar to the twiddling thumbs and sleepless nights that teams at companies like Capital One and LendingTree endured as they waited for the ugly details on what PII was stolen in their famous breaches. That wait is about to become even more stressful, now that the US SEC (Securities and Exchange Commission) has adopted new rules enhancing disclosure requirements for cybersecurity readiness and incident reporting. Starting as soon as December of this year, publicly traded companies will have only four business days after determining that a breach is “material” to disclose it - a target the British Museum certainly isn’t going to hit. But, surely, software engineering teams at tech companies should have no problem, right? Isn’t that what technology is for???

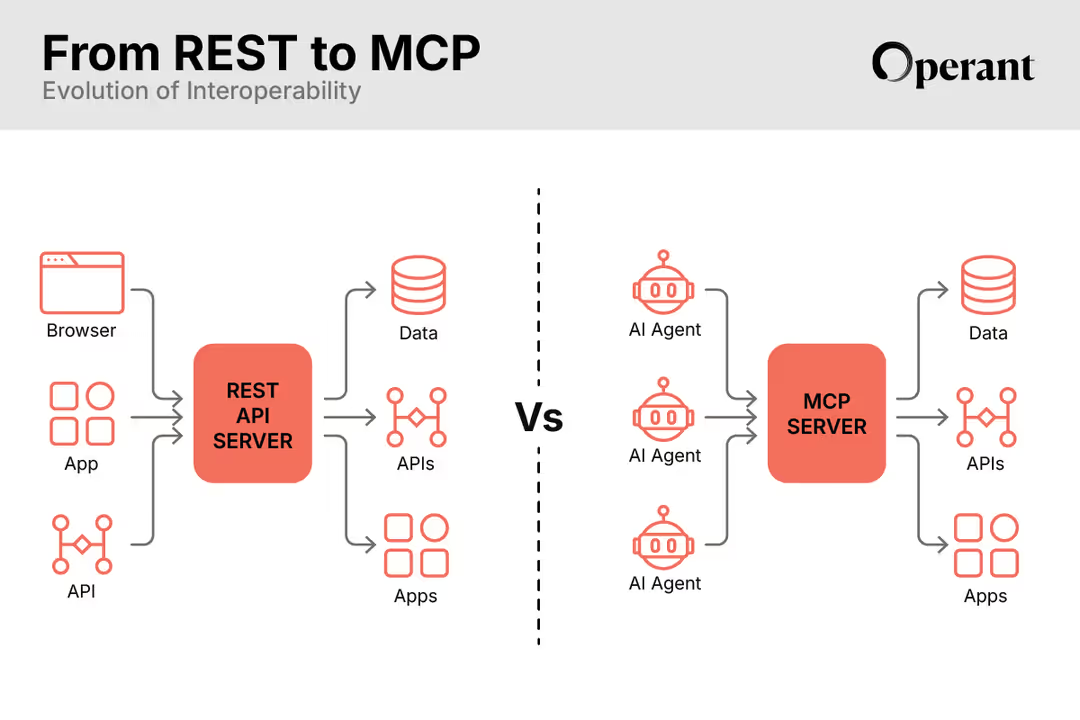

Yet most companies are a far cry from being able to shut down a breach within the day, let alone report on its full scope, especially if data has been leaked or exfiltrated through hidden processes over a long period of time. The scale and complexity of Kubernetes make this even harder in cloud-native environments (a topic we write about often), since most teams at most companies don’t have a full picture of their service-to-service and API-to-API relationships, nor do they understand the real risk of lateral movement attacks enabled by grossly over-permissioned service accounts - the default setup in Kubernetes. But I don’t expect to see many companies slamming on the brakes of their cloud transformations until they get their snowballing risk under control.

Just as the British Museum was happy to take in as many artifacts as possible and secure them with whatever systems and resources it had (flaws included), engineering teams are usually willing to take the benefits of Kubernetes and slap together whatever security is easiest, so that the pesky challenges of securing complex environments don’t hold them back from their primary goal - growth. After all, unless there is an embarrassing breach, security is rarely in the headlines. Except, you know, when it is… with all sorts of unpleasant consequences that weren’t particularly top of mind during the years that someone was secretly pilfering precious antiquities right through the fingers of one of the most famous museums in the world. Did I mention that Greece has asked for its antiquities back, now that it’s clear they aren’t as secure as everyone thought?

The reality is that public breaches of trust have consequences that go far beyond the loss of artifacts or customer data. They rock the foundations of what a compromised institution stands for, and they can damage that institution’s reputation irrevocably. In 2021, another bad-acting employee knocked $4 billion off the value of Ubiquiti after abusing his authorized access to hack the company’s systems, hold its data hostage, and eventually release false reports of a cover-up.

While a museum full of dusty ancient objects may seem like the opposite of a virtual cloud environment, the parallels between them are surprisingly relevant. But beyond a cautionary tale about vetting hires properly and a philosophical debate about which is the more palpable loss - your PII or some dusty Roman coins (most people who aren’t historians would probably choose the former) - what can cybersecurity teams learn from this breach of museum security?

Combining Segmentation With A Least Privilege Access Model

This most recent incident isn’t the first public headache for the British Museum. In 2011, a Cartier ring that was not on public display went missing. Although the museum “heavily invested” in security after the fact, the items stolen in the latest incident were clearly not properly locked away. There is a simple solution to this problem, a core tenet of Zero Trust strategy that was around long before it was applied to cybersecurity.

Had the museum organized its antiquities into tiers of importance, giving the most important items extra security (say, an extra lock or two, or a security camera or motion detector here or there) while still keeping at least one lock on the cabinet door for even the least important items, it would have been a lot harder for the employee to pilfer them. Deterrence is itself a powerful tool, as is… a lock.

Now, presumably this employee had at least a few keys assigned to them, which is why they had so much more opportunity than the random member of the public who stole an ancient Greek statue out of an unguarded gallery in 2002. But surely they were not the head of security, or in some other similarly authoritative role that would have come with all the keys. It wasn’t a social engineering attack, in which a con artist swindles their way into obtaining the keys, nor was it a case of falsified identity - the employee worked there over a long period of time, pilfering antiquities in between meetings and emails. So, with a standard level of employee access, they were able to steal objects continuously without anyone noticing. The media has yet to reveal this thief’s job title, but one would be hard-pressed to argue that they needed access to thousands of artifacts every day in order to do their job. A core tenet of least privilege is giving an employee access only to the resources (and keys) they truly need to do their job, and yet this employee was grossly over-permissioned.
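To make the two ideas in this section concrete, here is a minimal Python sketch of a default-deny access check that combines an explicit, per-resource grant (least privilege) with a tier requirement (segmentation). The `Tier`, `Resource`, and `Principal` types and the example objects are hypothetical illustrations, not any particular product’s access model.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical sensitivity tiers; a higher value means more protection."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Resource:
    name: str
    tier: Tier


@dataclass
class Principal:
    name: str
    # Explicit grants only: the resources this principal needs for the job.
    grants: set[str] = field(default_factory=set)
    # The most sensitive tier this principal is cleared to touch.
    clearance: Tier = Tier.LOW


def can_access(principal: Principal, resource: Resource) -> bool:
    """Default deny: require an explicit grant (least privilege)
    AND clearance for the resource's tier (segmentation)."""
    if resource.name not in principal.grants:
        return False
    return principal.clearance >= resource.tier


# Hypothetical example objects, named after items in the story.
coins = Resource("roman-coins", Tier.MEDIUM)
ring = Resource("cartier-ring", Tier.HIGH)
curator = Principal("curator", grants={"roman-coins"}, clearance=Tier.MEDIUM)
intern = Principal("intern", grants={"cartier-ring"}, clearance=Tier.LOW)

print(can_access(curator, coins))  # True: granted and cleared
print(can_access(curator, ring))   # False: never granted
print(can_access(intern, ring))    # False: granted, but tier too high
```

Requiring both checks means a single mistake (an over-broad grant or an over-generous clearance) is not enough on its own to expose the most sensitive tier.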

But what is the root cause of over-permissioning to begin with? It’s easy to say that least privilege is the desired state, but managing thousands of locks and a segmentation scheme of ranked access tiers is complicated and requires ongoing resources to maintain. It is much easier and faster on a day-to-day basis to leave everything open… easier, but much more dangerous.

The parallels between this situation and securing a cloud-native environment are striking. Over-permissioned service accounts in Kubernetes are an unfortunate de facto norm at this point, as is sharing service credentials or API keys between engineers and other employees. Many engineers can reach all sorts of sensitive data they don’t need for their jobs via API-to-API and service-to-service hops within Kubernetes. These connections should be locked down but almost never are, since managing ephemeral identities within K8s is a very challenging technology problem.
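One low-effort way to start finding over-permissioned service accounts is to scan RBAC rules for wildcards and for read access to Secrets. The sketch below assumes the rules have already been exported as plain dicts with the `apiGroups`, `resources`, and `verbs` fields that Kubernetes RBAC rules use; the `audit_rules` helper, the role names, and the choice of what counts as “sensitive” are hypothetical and would need tuning for a real cluster.

```python
# Hypothetical audit helper. Each rule is a plain dict shaped like a
# Kubernetes RBAC PolicyRule: apiGroups, resources, verbs.
SENSITIVE_RESOURCES = {"secrets"}  # assumption: extend for your cluster
READ_VERBS = {"get", "list", "watch"}


def audit_rules(role_name: str, rules: list[dict]) -> list[str]:
    """Return human-readable findings for over-broad rules in one Role."""
    findings = []
    for i, rule in enumerate(rules):
        groups = set(rule.get("apiGroups", []))
        resources = set(rule.get("resources", []))
        verbs = set(rule.get("verbs", []))
        # Any wildcard grants far more than a single workload usually needs.
        for label, values in (("apiGroups", groups),
                              ("resources", resources),
                              ("verbs", verbs)):
            if "*" in values:
                findings.append(f"{role_name}[{i}]: wildcard {label}")
        # Flag read access to sensitive resources, explicit or via wildcard.
        touches_sensitive = "*" in resources or bool(resources & SENSITIVE_RESOURCES)
        can_read = "*" in verbs or bool(verbs & READ_VERBS)
        if touches_sensitive and can_read:
            findings.append(f"{role_name}[{i}]: can read secrets")
    return findings


overbroad = [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}]
scoped = [{"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get"]}]

for finding in audit_rules("ci-bot", overbroad):
    print(finding)
print(audit_rules("config-reader", scoped))  # []
```

A static scan like this only shows what an identity *could* do; it says nothing about which of those permissions are actually used at runtime.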

Just as it was difficult for the British Museum to manage keys for so many employees, especially with a combination of locks and keys from old and new systems, it is difficult for engineering teams to manage permissions for so many different types of runtime identities within applications, each with its own identification logic. This leaves application internals basically open to anyone who gets past the one external gate. Once a thief is inside - whether a nefarious employee out to sell PII on the open market or a Thomas Crown-style opportunist busting in through an open API - “locks” (i.e., identity and access control policies) on the precious assets and the paths that lead to them are the only way to control blast radius.
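“Blast radius” can be framed as a reachability question: starting from a compromised identity, which sensitive assets can it reach by hopping from service to service? This minimal Python sketch runs a breadth-first search over a hypothetical call graph, once with no internal policies (every service can call every other) and once with an explicit allowlist of calls. The service names are made up for illustration.

```python
from collections import deque


def blast_radius(graph: dict[str, set[str]], start: str, sensitive: set[str]) -> set[str]:
    """Breadth-first search from a compromised service; returns the
    sensitive assets reachable through allowed service-to-service calls."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen & sensitive


# Hypothetical services; only customer-db holds sensitive data.
services = {"public-api", "search", "catalog", "orders", "customer-db"}
sensitive = {"customer-db"}

# No internal policies: any service can call any other service.
open_mesh = {s: services - {s} for s in services}

# Explicit allowlist: only the calls each service actually needs.
allowlisted = {
    "public-api": {"search", "orders"},
    "search": {"catalog"},
    "orders": {"customer-db"},
}

print(blast_radius(open_mesh, "search", sensitive))    # {'customer-db'}
print(blast_radius(allowlisted, "search", sensitive))  # set()
```

The allowlist shrinks the blast radius of a compromised `search` service to nothing, but it does not eliminate risk: a compromised `public-api` can still reach `customer-db` through `orders`, which is why the assets themselves need locks as well as the paths.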

Capture And Stop Unexpected Misbehavior In Action

While it’s easy to be snarky that someone should have noticed a red flag or two while interviewing these famously bad employees, the reality is that past behavior can only go so far in predicting the future. An employee may become disgruntled over time, or may be a bad egg who simply never had the right opportunity to commit a crime before, and employers are limited to the information they have when deciding whether to trust a new hire. Sometimes a history of nefarious activity just isn’t there - if it were, that person obviously would not have been hired.

So, these bad-actor breaches are inherently unpredictable - they are new misbehavior that wasn’t anticipated during setup (or dev and staging) and only went into action once the bad actor was inside the live “production” environment (just like a zero-day vuln). This situation has clear parallels to the pitfalls of depending too heavily on static analysis tools to identify and prevent cybersecurity breaches. Sure, they can help you weed out bad apples that look like known bad apples, but what about new modes of misbehavior?
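The difference between matching known bad apples and spotting new misbehavior can be shown in a few lines. In this hypothetical Python sketch, a signature check only recognizes paths on a known-bad list, while a simple behavioral check compares an identity’s current activity against its own history using a z-score. The paths, numbers, and the 3-standard-deviation threshold are illustrative assumptions; real anomaly detection is considerably more involved.

```python
from statistics import mean, stdev

# Hypothetical signature list: paths previously seen in known attacks.
KNOWN_BAD_PATHS = {"/admin/dump", "/debug/export"}


def signature_alert(path: str) -> bool:
    """Static-style check: only fires on behavior already catalogued."""
    return path in KNOWN_BAD_PATHS


def baseline_alert(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Behavioral check: fires when `current` sits more than `threshold`
    standard deviations above this identity's own historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # needs at least two samples
    if sigma == 0:
        return current > mu
    return (current - mu) / sigma > threshold


# Records read per hour by one service account (hypothetical numbers).
history = [18, 22, 19, 21, 20, 20]

# A novel misuse: a perfectly ordinary path, at an extraordinary volume.
print(signature_alert("/records"))   # False: not a known-bad path
print(baseline_alert(history, 400))  # True: far outside the baseline
print(baseline_alert(history, 21))   # False: normal variation
```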

Museums have CCTV and guards monitoring live activity (albeit with some major blind spots), but the British Museum still suffered from a problem similar to that of cybersecurity teams - there was simply too much data for humans to comprehend, and despite all that data, no one spotted the thefts in action. Sure, guards watching a security feed can catch some missteps, but they simply don’t have the resources to a) put cameras and motion sensors everywhere and b) watch all the footage like a hawk 24/7. This is where technology should have a leg up, and yet most teams are still unable to monitor their live application traffic and pick up the signals that need to be acted upon. They are either flooded with irrelevant alerts and endless Jira tickets, or they don’t have all the “cameras” set up because their heavy instrumentation lags behind their K8s expansion. Blind spots + too much data + no resources for immediate action sounds pretty familiar.

That’s why live monitoring of production environments, with automated collection and prioritization of risk signals, is so important to a team’s ability to act on risk. It isn’t enough to gather a bunch of footage that no one can watch, or even to set up motion sensors if they send off so many false positives that people start to ignore them. We need to use technology to sift through the signals and unearth the misbehaviors that matter, and then shut them down instantly (for example, by quarantining suspicious APIs, tightening API rate limits, and capping CPU to prevent crypto mining) rather than waiting for a manual response from a team that is overburdened and under-resourced.
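As one example of an automated response, here is a minimal token-bucket rate limiter in Python, with a hypothetical `tighten` method that a detector could call to throttle a flagged API without waiting for a human. The token bucket is a standard rate-limiting technique, but the class, the fake clock, and the numbers are illustrative assumptions rather than how any specific product enforces limits.

```python
import time


class TokenBucket:
    """Classic token-bucket rate limiter for a single API."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def tighten(self, strict_rate: float) -> None:
        """Hypothetical automated response: slow refills for a flagged API."""
        self.rate = min(self.rate, strict_rate)


class FakeClock:
    """Deterministic clock so the example does not depend on wall time."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


clock = FakeClock()
bucket = TokenBucket(rate=1.0, capacity=5, clock=clock)
burst = [bucket.allow() for _ in range(7)]
print(burst)  # first five allowed, last two rejected

bucket.tighten(strict_rate=0.1)  # e.g. after a detector flags this API
clock.t += 2.0
print(bucket.allow())  # False: only 0.2 tokens refilled at the strict rate
```

Throttling rather than blocking outright is a deliberately conservative choice here: it limits the damage of a possible false positive while still cutting off high-volume misuse.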

A Leak Left Unplugged Over Time Can Cause More Loss Than A Flood

As a final thought on this topic, I’d like to revisit the sheer volume of the British Museum theft - 1,500 artifacts. That scale would be unheard of in a single incident. While a handful of very famous paintings have been brazenly stolen in one-off incidents over the years - including Munch’s The Scream and even the Mona Lisa (also stolen by employees misusing authorized access) - the number of objects stolen from the British Museum reflects a scope that can only accumulate over a long period of time. Similar damage adds up in cybersecurity incidents when hackers settle into blind spots and exfiltrate data for days, months, or even years before their breach is finally noticed. Some famous incidents now involve billions of stolen records - a trend that is certain to continue as the volume of data grows and teams find it exponentially harder to manage and make useful.
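A leak that stays under every per-event threshold can still add up to a flood. This hypothetical Python sketch compares a per-day egress limit with a cumulative budget over a sliding 30-day window for a single identity; the limits and daily volumes are made-up numbers chosen to illustrate the idea, and in practice the budget would be derived from that identity’s normal behavior.

```python
from collections import deque


class CumulativeEgressMonitor:
    """Hypothetical per-identity monitor: sums data sent over a sliding
    window of days and alerts when the total exceeds a budget."""

    def __init__(self, window_days: int, budget_mb: int) -> None:
        self.window = deque(maxlen=window_days)  # oldest day drops off automatically
        self.budget_mb = budget_mb

    def record_day(self, sent_mb: int) -> bool:
        self.window.append(sent_mb)
        return sum(self.window) > self.budget_mb


DAILY_LIMIT_MB = 100  # hypothetical "flood" threshold per day
monitor = CumulativeEgressMonitor(window_days=30, budget_mb=500)

daily_alerts = 0
first_cumulative_alert = None
for day in range(1, 31):
    sent = 50  # a steady trickle, always under the daily limit
    if sent > DAILY_LIMIT_MB:
        daily_alerts += 1
    if monitor.record_day(sent) and first_cumulative_alert is None:
        first_cumulative_alert = day

print(daily_alerts)            # 0: the per-day rule never fires
print(first_cumulative_alert)  # 11: 11 * 50 MB = 550 MB > 500 MB
```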

While new attack vectors are also on the rise, the security challenges highlighted by the British Museum theft are age-old struggles: choosing the right people to trust, and hardening security for when those choices turn out to be wrong. Perfecting human trust isn’t going to happen any time soon, but we do have technology to support us in hardening the security behind the front gates, and at Operant, we encourage everyone to use it.

To learn more about how Operant can monitor and secure live cloud-native applications, enforce least-privilege access controls within application internals across the SDLC, and reduce blast radius of bad-actor breaches, request a trial or email us at hello@operant.ai.
