Reining in Shapeshifting LLMs by Supercharging Known Security Defenses

In my previous two posts in this Secure LLMs blog series, I showed how surprisingly easy it was for me to hack ChatGPT with a prompt injection attack. I then took a deep dive into rapidly evolving LLM-based application architectures and why their security foundations are inherently brittle. Suppose you build a non-deterministic AI with uncontrolled access to large amounts of organizational data and also give it open access to untrusted third-party plugins and APIs. That combination can lead to critical security breaches on a catastrophic scale never seen before.

In short, the technology is probabilistic at its core (kind of like a shapeshifter…), and teams are rushing to adopt it without any guardrails in place. Together these fuel dangerous permutations of unpredictable behavior, and bad actors can manipulate LLMs in surprisingly easy ways to achieve malicious outcomes. Stolen medical data? Check. Stolen Social Security numbers? Check. But the stakes go well beyond that. As the adoption of LLMs grows exponentially, the systems our very civilization depends on could become critically compromised. These include the supply chain management that gets our food where it needs to go and the software that keeps fire suppression systems on container ships running correctly, so that we don’t have another billion-dollar shipwreck. They also include the healthcare technology that tracks which medications you’re on, so your doctor can give you the right treatment. If systems like these were compromised, the human impact could be horrific.

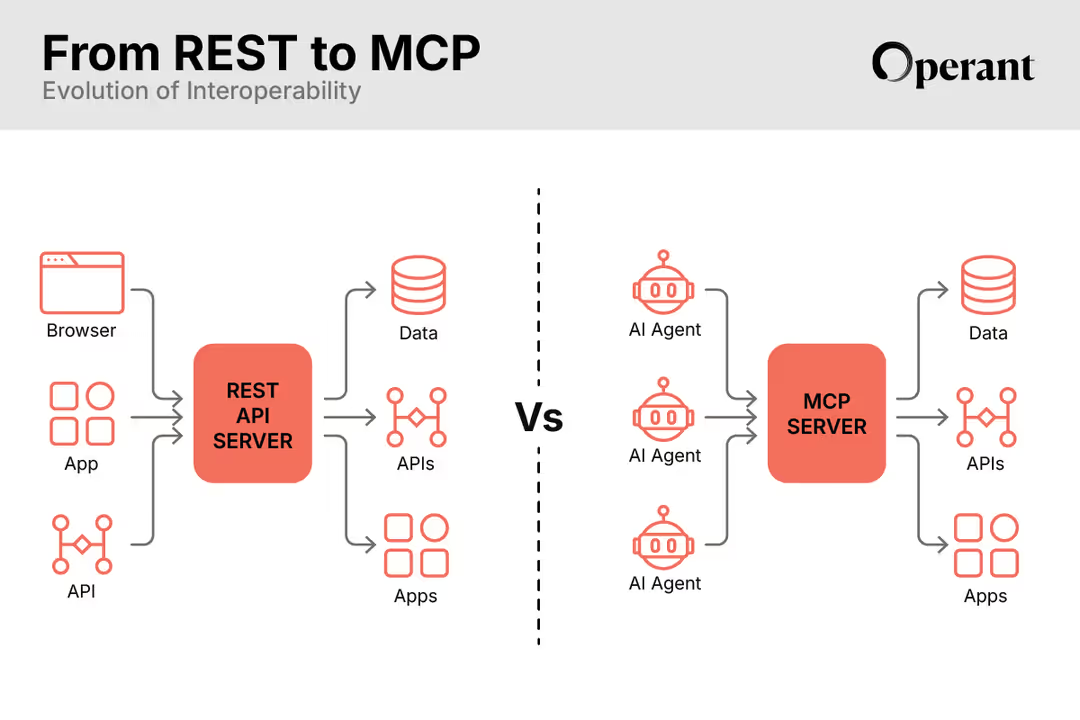

Yet at the same time, there is no doubt that intelligent AI helpers based on LLMs will continue to be built. They will create new ways of interfacing with and acting on our ever-growing data, perhaps even becoming the new APIs and SQL layers of tomorrow!

And so the paramount question becomes: how do we enable the magic of LLMs to help humans make the promised strides in all sorts of life-changing ways while still being secure and safe?

Mapping Known Defenses Against the Dark Arts to LLMs

The underlying motivations and techniques for attackers to gain access to an organization’s data and perform malicious actions with it have not changed with the arrival of LLMs. They have well-known foundations in types of runtime attacks such as:

- Horizontal and vertical privilege escalation

- Confused deputy

- Unauthorized access control

- Data poisoning with API overload

- Data exfiltration via plugin manipulation

What has changed, though, is how accessible these attacks have become. Each one is now a single API call away, hidden among the thousands (or millions) of calls that happen every day and are rarely, if ever, monitored in real time. Application teams are racing to ship LLMs without considering how to deploy them securely. This frenzied development has left massive gaps in identity and access controls that were already struggling to keep up with the adoption of cloud-based architectures. Add to that the seemingly countless ways in which natural-language prompts can wreak havoc, far beyond anything seen before in attacks like SQL injection. The result is a trifecta of trouble descending all at once in a truly unprecedented way.

In a SQL injection attack, an attacker has a limited set of techniques for injecting malicious SQL code, bounded by the SQL query syntax itself. A well-known example is adding an ‘OR 1=1’ condition to a SELECT query so that it fetches every record in a table when only one record was expected. These attacks can be stopped in well-understood ways. One is sanitizing inputs. Another is using parameterized queries, which let the database engine distinguish between literal text and SQL code. That works because SQL is deterministic code, not AI. With prompt injection, by contrast, the attacker only needs to coax the LLM in natural language, a far more unstructured and unconstrained form of input than SQL. The LLM falls for a malicious instruction that is indistinguishable from benign text, and it then reveals PII for all users in its data stores or deletes important data. That is the same simple trick I used to deceive ChatGPT. Because an LLM is a black box, there is no foolproof way to explicitly tell it how to distinguish between plain text and an instruction. This makes prompt injection a gaping, unsolved security hole in LLM apps.
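To make the contrast concrete, here is a minimal Python sketch using the standard-library `sqlite3` module with an in-memory database. The `users` table and its rows are made up for illustration.

The unsafe function splices input directly into the SQL string, so the `x' OR 1=1 --` payload turns a single-user lookup into a dump of every row. The safe function passes the same input through a `?` placeholder, and the engine always treats that value as a literal, never as code.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    """Build a throwaway in-memory table with made-up data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, ssn TEXT)")
    conn.executemany(
        "INSERT INTO users (name, ssn) VALUES (?, ?)",
        [("alice", "000-00-0001"), ("bob", "000-00-0002")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: the input becomes part of the SQL text itself.
    query = f"SELECT name, ssn FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder binds the input as data, never as SQL code.
    return conn.execute("SELECT name, ssn FROM users WHERE name = ?", (name,)).fetchall()

conn = make_db()
payload = "x' OR 1=1 --"
print(find_user_unsafe(conn, payload))  # returns every row in the table
print(find_user_safe(conn, payload))    # returns [] because no user has that literal name
```

A natural-language prompt has no equivalent of the `?` placeholder. Instructions and data travel through the same channel, which is why prompt injection is so much harder to stop.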

Security teams, platform teams, developers and engineering leaders need to act quickly - quicker than ever before - to at least match the pace of security with the pace of LLM application development. Attackers are already way ahead of where the defenses are, and many teams are at risk of falling further and further behind as they extend the “black boxes” deeper into their stacks without the teams or the knowledge or the transparency to manage them.

As the security thinking around LLM-based applications evolves, security teams should avoid feeling paralyzed by the arrival of this new technology that no one completely understands, but instead bring foundational security controls that they already know of to the front and center of their application security roadmap. From there, the task of adapting them towards LLMs becomes less amorphous and a bit more familiar, something that many of us would appreciate as we rush to keep up with the superhuman pace of change that we’re swimming in right now.

With that, let’s go into how commonly known foundational security controls can be adapted to address some of the known attack vectors created by LLMs and Generative AI.

LLM Isolation

Taking a cue from cloud-native microservice architectures, teams should carefully consider how they architect LLM applications. They should introduce design principles such as separation of concerns between the different components of an LLM application. For instance, a plugin interface component could serve as a middleware layer between the LLM and its external inputs and outputs. This is one way to keep the LLM itself from carrying out external interactions directly.

Because LLMs are black boxes, there is currently no predictable way to make them understand the security and trust implications of external communication. Examples include a third-party API call to fetch data or code, or to take an action. Teams can move the application logic that actually carries out an “action” (like making an external API call) into a service separate from the LLM, with proper access controls in place. This would segment the unpredictable LLM from the more structured, non-LLM code that is capable of taking actions. Beyond segmentation at the application code level, security teams could enforce segmentation policies externally so that the LLM service is always quarantined and never able to make external network calls. Those policies place guardrails around how LLMs can behave.
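The split between the LLM that decides and the service that acts can be sketched in Python. This is a minimal sketch, assuming the LLM has been prompted to reply with a JSON action proposal. The LLM never calls anything itself. A separate action service parses that output as untrusted data and runs only handlers that it owns. The `get_forecast` handler, the `HANDLERS` registry, and the JSON shape are all hypothetical, not part of any particular framework.

```python
import json
from typing import Callable, Dict, Set, Tuple

def get_forecast(city: str) -> str:
    # Hypothetical handler: a real one would call a third-party API from this
    # service, which (unlike the LLM) holds the credentials and network access.
    return f"forecast for {city}: (stub)"

# Registry owned by the action service: action name -> (handler, required argument names).
HANDLERS: Dict[str, Tuple[Callable[..., str], Set[str]]] = {
    "get_forecast": (get_forecast, {"city"}),
}

def handle_llm_output(raw: str) -> str:
    """Treat the LLM's reply as untrusted data: parse it, validate it, then dispatch."""
    proposal = json.loads(raw)  # parsed as data, never passed to eval/exec
    if not isinstance(proposal, dict):
        raise ValueError("expected a JSON object")
    name = proposal.get("action")
    args = proposal.get("args", {})
    entry = HANDLERS.get(name) if isinstance(name, str) else None
    if entry is None:
        raise PermissionError(f"action {name!r} is not registered")
    handler, required = entry
    if not isinstance(args, dict) or set(args) != required:
        raise ValueError(f"arguments for {name!r} must be exactly {sorted(required)}")
    if not all(isinstance(v, str) for v in args.values()):
        raise ValueError("argument values must be strings")
    return handler(**args)
```

For example, a proposal of `{"action": "get_forecast", "args": {"city": "Oslo"}}` returns the stubbed forecast. An injected proposal such as `{"action": "delete_all_records"}` raises `PermissionError` before anything runs. Network-level quarantine of the LLM service would still need to be enforced outside this code.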

Runtime Protections

Prompt injection unleashes a new class of runtime attack vectors. Attackers can run a series of prompt injection attempts until the LLM acts in ways that achieve their desired outcomes. For instance, an attacker could use a manipulated LLM to access data in an S3 bucket maliciously. The same approach could exfiltrate sensitive PII such as SSNs, medical records, or even voting records from an organization’s systems. This is an example of a lateral movement attack, but in a new environment that exploits LLMs and plugins along the way. Security teams need to apply runtime protections against these attacks to make it constantly harder for attackers to gain unauthorized access to systems. One such protection is enforcing proper rate limits at different points in the stack. Another is enforcing least privilege across every layer of the stack, from LLM container runtimes to the APIs an LLM is allowed to access, which minimizes an attack’s blast radius.
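As one example of a runtime protection, here is a minimal token-bucket rate limiter in Python. It is keyed by caller identity, for instance one bucket per LLM session or per plugin. The class names and the capacity and refill parameters are illustrative assumptions. In practice, rate limits are often enforced in an API gateway or service mesh rather than hand-written. The injectable `clock` parameter keeps the sketch deterministic and testable.

```python
import time
from typing import Callable, Dict

class TokenBucket:
    """Permits bursts of up to `capacity` calls, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, capped at the bucket's capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class RateLimiter:
    """Keeps a separate bucket per caller, so one noisy session cannot starve others."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, caller: str) -> bool:
        if caller not in self.buckets:
            self.buckets[caller] = TokenBucket(self.capacity, self.rate, self.clock)
        return self.buckets[caller].allow()
```

A gateway in front of the LLM or a plugin would call `allow(caller_id)` on each request. When it returns `False`, the gateway would reject the request, for example with HTTP 429. This slows down an attacker who is iterating through prompt injection attempts. Least privilege is a separate control and is not shown here.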

Secure-by-Design LLMs

Secure-by-default principles, under which LLM-based applications are secure from the get-go, will become ever more important, because attackers are currently far ahead of the defensive capabilities of teams deploying LLMs. Guardrails that make secure behavior the default will help teams defend against the constantly evolving threats brought forth by Gen AI. Examples of such behavior include microsegmentation, least privilege, and rate limits.

Building a Chain of Trust When Daisy-Chaining LLMs

Trust controls are virtually nonexistent when chaining LLMs today. When an LLM invokes plugins and third-party APIs to take actions, no explicit security requirements must be enforced along the way. The problem of insufficient access controls predates LLMs, but it becomes all the more important as LLMs gain more capabilities to take actions via plugins across all sorts of use cases.
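One way to start attaching explicit security requirements to each hop in a chain is to require every call to a plugin to carry a verifiable caller identity. The Python sketch below uses the standard-library `hmac` module with a shared secret for each calling service. The service name, key, and 60-second freshness window are hypothetical. Production systems more commonly rely on mutual TLS or a workload identity system instead of shared secrets. The principle is the same either way: a plugin rejects any request whose origin and integrity it cannot verify.

```python
import hashlib
import hmac
import json

# Hypothetical registry of calling-service identities and their shared secrets;
# real keys would come from a secrets manager, never from source code.
SERVICE_KEYS = {"llm-orchestrator": b"example-secret-do-not-use"}
MAX_SKEW_SECONDS = 60  # reject requests older (or newer) than this window

def _mac(key: bytes, service: str, ts: str, body: str) -> str:
    # Bind the caller identity, timestamp, and body into a single signature.
    message = f"{service}|{ts}|{body}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def sign_request(service: str, key: bytes, payload: dict, now: float) -> dict:
    body = json.dumps(payload, sort_keys=True)
    ts = str(int(now))
    return {"service": service, "ts": ts, "body": body, "sig": _mac(key, service, ts, body)}

def verify_request(req: dict, now: float) -> dict:
    """Runs inside the plugin: return the payload only if the caller checks out."""
    key = SERVICE_KEYS.get(req["service"])
    if key is None:
        raise PermissionError("unknown caller identity")
    if abs(now - int(req["ts"])) > MAX_SKEW_SECONDS:
        raise PermissionError("stale or future-dated request")
    expected = _mac(key, req["service"], req["ts"], req["body"])
    # compare_digest does a constant-time comparison to avoid timing leaks.
    if not hmac.compare_digest(expected, req["sig"]):
        raise PermissionError("signature mismatch: request was forged or tampered with")
    return json.loads(req["body"])
```

The timestamp check alone does not stop a captured request from being replayed within the window. A fuller design would also track nonces.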

In the old world, firewalls and microsegmentation techniques would define trust relationships at runtime in terms of which IP addresses and subnets were allowed to talk to which other IP addresses and ports. I would argue that these techniques are still relevant in the age of LLMs, but need to be fundamentally adapted to the characteristics of LLM and microservice-based apps.

Zero trust in the era of LLMs can’t be limited to checking IPs at the perimeter of a lifted-and-shifted VM. It needs to be fundamentally rebuilt for the live application environment where LLMs and plugins are daisy-chained. That means continuous service-to-service identity authentication, along with API-to-API and service-to-data access controls. These are the only controls that can stop nefarious plugin access in a live LLM application environment, limiting damage or preventing breaches that could otherwise be catastrophic. Microsegmentation in the age of LLMs needs to be fine-grained enough to express which plugins an LLM is allowed to talk to and which specific actions it may take within a plugin’s API.
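Expressed as code, a fine-grained policy of this kind could be a minimal default-deny check like the one below. This Python sketch assumes that each caller has already been authenticated to a service identity. The identities, plugin names, and `METHOD /path` action strings are hypothetical. A real deployment would typically evaluate rules like these at runtime in a policy engine or service mesh rather than in application code.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

@dataclass(frozen=True)
class Rule:
    source: str               # authenticated identity of the calling service (e.g. an LLM app)
    plugin: str               # destination plugin service
    actions: FrozenSet[str]   # specific API operations allowed on that plugin

# Hypothetical policy: the support LLM may read tickets and articles, nothing else.
POLICY: Tuple[Rule, ...] = (
    Rule("support-llm", "crm-plugin", frozenset({"GET /tickets", "GET /tickets/{id}"})),
    Rule("support-llm", "kb-plugin", frozenset({"GET /articles"})),
)

def is_allowed(source: str, plugin: str, action: str, policy: Tuple[Rule, ...] = POLICY) -> bool:
    """Default deny: allow only when an explicit rule matches identity, plugin, and action."""
    return any(
        rule.source == source and rule.plugin == plugin and action in rule.actions
        for rule in policy
    )

print(is_allowed("support-llm", "crm-plugin", "GET /tickets"))          # True
print(is_allowed("support-llm", "crm-plugin", "DELETE /tickets/{id}"))  # False: action not granted
print(is_allowed("support-llm", "payments-plugin", "POST /refunds"))    # False: plugin not granted
```

Compared with an IP-and-port firewall rule, this policy names the plugin and the individual API operation. An LLM that has been talked into attempting a delete or a refund is stopped even though the network path to the plugin exists.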

Conclusions

There is no doubt that LLMs will change the game in how we understand our data and harness it to take action and move businesses forward. However, teams need to carefully consider the security implications of deploying LLMs and have the right defenses in place against the new attack vectors LLMs introduce. Taking a proactive stance is no longer optional; it is the only way to avoid future calamities, but it is also an achievable goal. Security teams, platform teams, developers, and engineering leaders all have a major stake in harnessing the power of LLMs safely and securely. The organizations that strike the right balance between security and innovation will be the real winners after the Gen AI hype settles.

An analogy I kept coming back to while writing this blog series on securing LLMs comes from Harry Potter. Harry learns Occlumency (aka shielding the mind, or microsegmentation) to keep Legilimency (aka LLM prompt injection) from stealing his thoughts and memories (aka our valuable data!). For better or worse, we do not live in a magical world. That means no one will be flying a Nimbus 2000 anytime soon. It also means there are very tangible things we can do to secure our applications in this new LLM-filled world. I’m cautiously optimistic with a solid plan in hand, and I hope that after reading this series, you are too.

Come talk to us if you would like to learn more about how Operant can bring runtime application protection and zero trust for APIs and Services to secure your LLMs!
