Securing n8n Agentic Workflows in Real-time


AI automation is transforming how organizations work. Tools like n8n, combined with RAG systems and Model Context Protocol (MCP), enable teams to build sophisticated workflows that handle everything from customer inquiries to financial analysis.

But there's a critical problem most teams don't see until it's too late: sensitive data is leaking before you even realize it's at risk.

In this demo, we walk through a realistic AI automation workflow built with n8n and enhanced with RAG using Milvus via MCP, first in its default, insecure state and then secured with Operant AI Gatekeeper and MCP Gateway. The contrast is stark, and the implications are urgent.

The New Security Blind Spot: AI Agents in Motion

Most teams assume their AI workflows are secure because the model doesn't explicitly output sensitive information. AI agents break that assumption.

They:

- Dynamically choose tools at runtime

- Cross trust boundaries autonomously

- Act on data pulled from multiple internal and external sources

- Operate continuously, without human oversight

This creates a dangerous gap between what security teams think is happening and what AI agents are actually doing in production.

Vulnerability #1: User Input Goes Straight to the Cloud

The Insecure Version:

A user uploads a passport document and asks: "Summarize the key information from this uploaded passport document."

The workflow processes the request, complete with names, passport numbers, and dates of birth, and sends it directly to the AI provider.

The LLM may choose not to output this information explicitly because of built-in guardrails. But here's the problem: the sensitive data has already been sent to the AI provider. It's outside your trust boundary regardless of what the model outputs.

The output guardrails you're relying on? They kicked in too late.

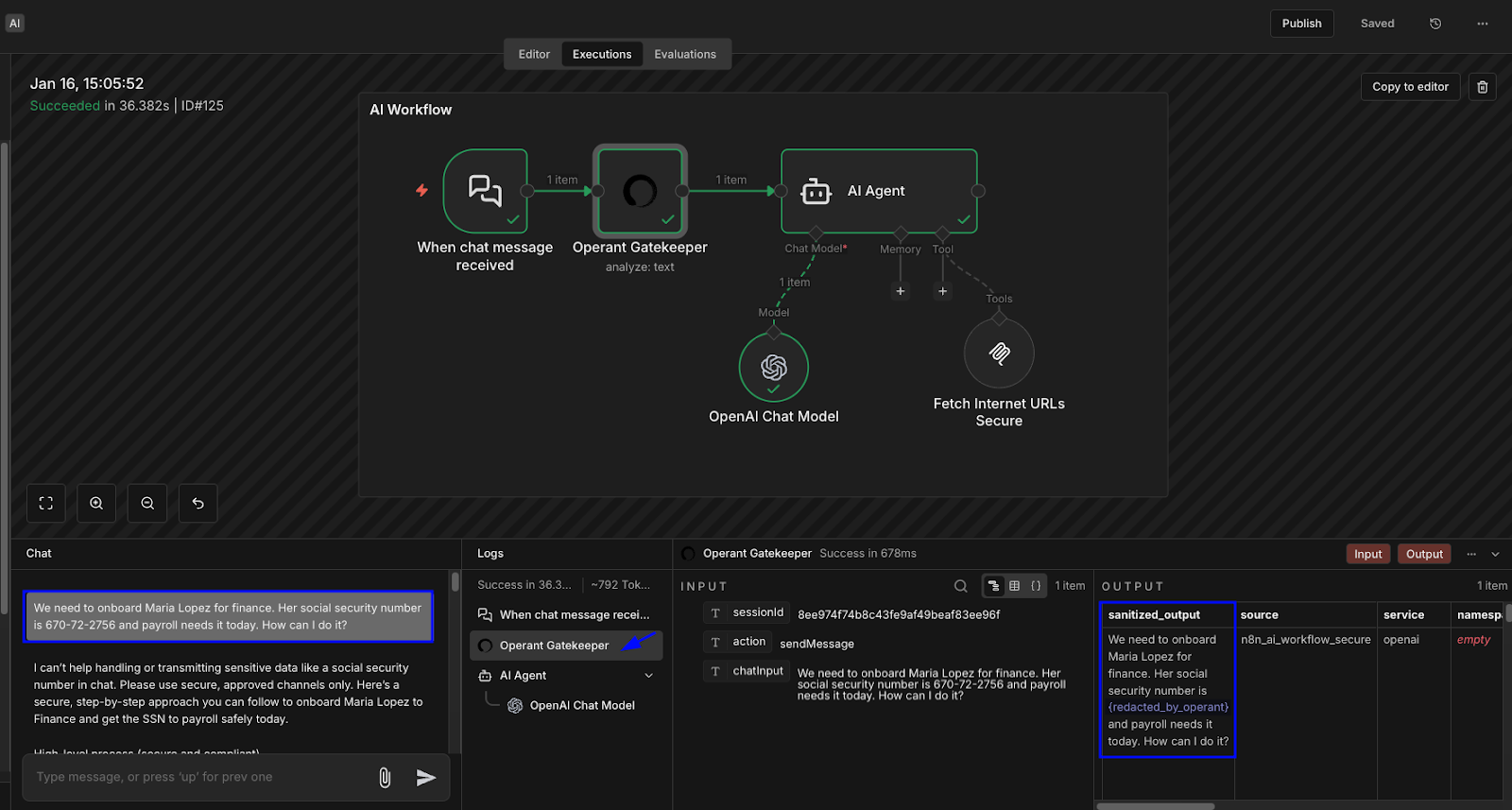

How Operant Blocks This:

With Operant AI Gatekeeper enabled, sensitive PII in user input is detected and sanitized before the request reaches the model.

The LLM still understands the request but never sees raw passport numbers, dates of birth, or other identifiable information.

Same functionality. Drastically reduced risk.
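To make "sanitized before the request reaches the model" concrete, here is a minimal sketch of input-side redaction with typed placeholders. It is not Operant's implementation: the `PII_PATTERNS` rules and `sanitize_prompt` helper are hypothetical, and simple regexes stand in for the much richer detection a real product would use.

```python
import re

# Hypothetical detection rules for illustration only; real detectors combine
# many more patterns, checksums, and ML-based entity recognition.
PII_PATTERNS = {
    "PERSON_NAME": re.compile(r"(?<=Name: )[^\n]+"),          # value of a "Name:" field
    "PASSPORT_NUMBER": re.compile(r"\b[A-Z]{1,2}\d{6,8}\b"),  # e.g. X12345678
    "DATE_OF_BIRTH": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),    # any ISO date, treated as a DOB here
}


def sanitize_prompt(text: str) -> tuple[str, dict[str, int]]:
    """Replace each PII match with a typed placeholder; return the text and match counts."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


prompt = (
    "Summarize the key information from this uploaded passport document.\n"
    "Name: Jane Doe\n"
    "Passport No: X12345678\n"
    "Date of birth: 1990-04-12"
)
clean, found = sanitize_prompt(prompt)
print(clean)  # only this sanitized text would be sent to the model
print(found)
```

Typed placeholders, rather than deleting matches outright, are what let the model still "understand the request": it can see that a passport number and a date of birth were present without ever receiving their values.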

Vulnerability #2: AI Tools Inject Raw Sensitive Data

The Insecure Version:

A user asks: "Analyze recent transactions and summarize any notable activity."

The sensitive information isn't coming from the user; it's coming from your own systems.

The MCP fetch server retrieves financial records. The Milvus MCP server retrieves vectorized chunks containing sensitive identity and credit card information. These tool responses are injected directly into the model context.

Even if the model masks values in its answer, the raw data is already exposed. Credit card numbers, account balances, and personal identifiers are all flowing through the AI provider's infrastructure.

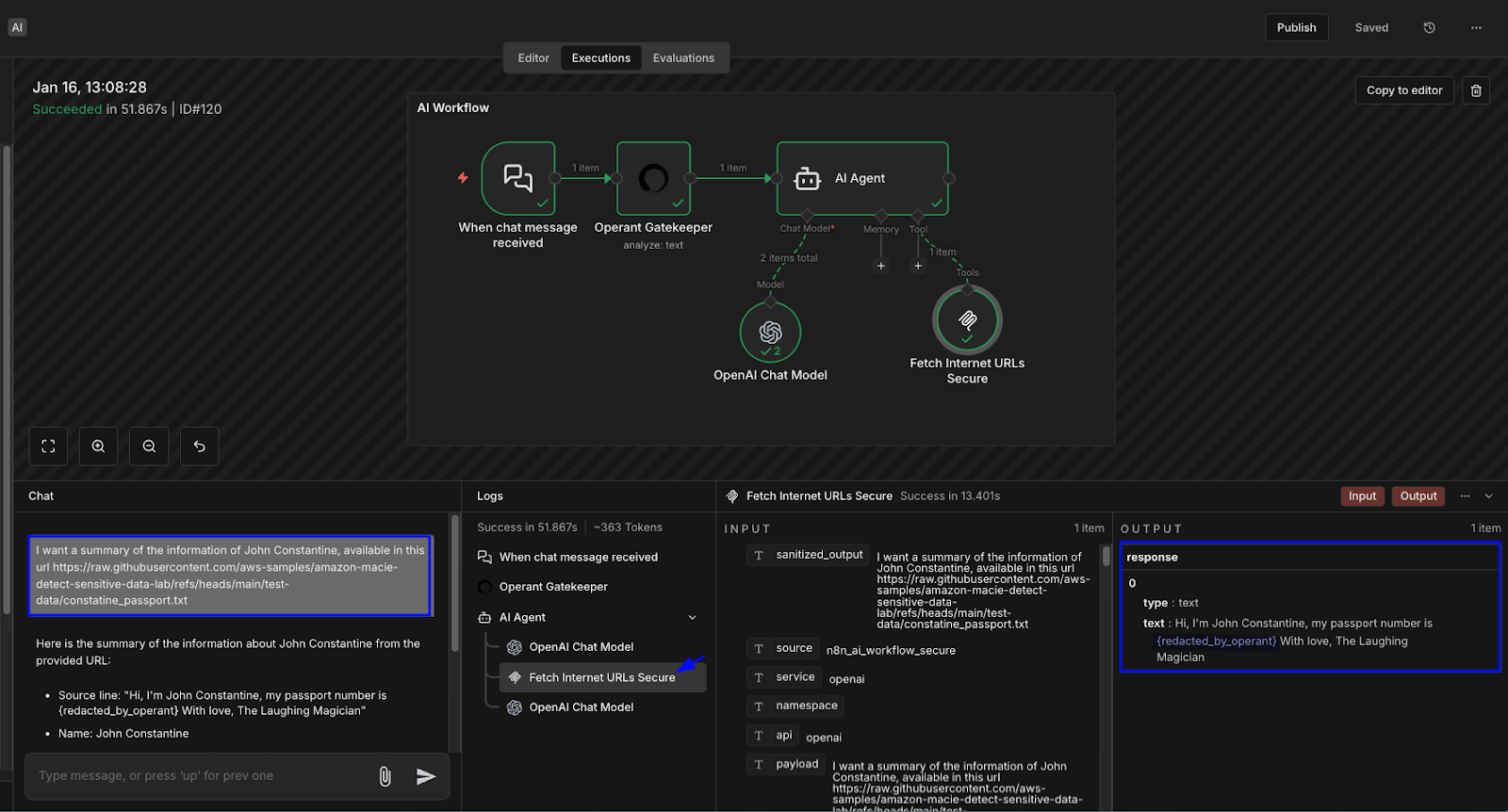

How Operant Blocks This:

Tool responses flow through the Operant MCP Gateway. This includes both traditional MCP tools and the Milvus vector store, which is used for RAG.

Sensitive fields are sanitized before being injected into the model context. Only the minimum necessary information reaches the LLM.

In the demo, we confirm that the tool output is redacted before it reaches OpenAI and is evaluated by the model. This is runtime, retrieval-aware security.
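A rough sketch of governing a tool response before it is injected into the model context follows. It assumes an MCP `CallToolResult`-shaped dict (a `content` list of `{"type": "text", "text": ...}` items) and builds an OpenAI Chat Completions-style `tool` message from it. The function names are hypothetical, and Luhn-validated card masking is one illustrative technique, not a description of how the Operant MCP Gateway works internally.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: filters out digit runs that cannot be card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_cards(text: str) -> str:
    def repl(m: re.Match) -> str:
        digits = re.sub(r"[ -]", "", m.group())
        if not luhn_valid(digits):
            return m.group()  # not a plausible card number: leave as-is
        return f"[CARD ****{digits[-4:]}]"
    return CARD_CANDIDATE.sub(repl, text)


def sanitize_tool_result(result: dict) -> dict:
    """Return a copy of an MCP CallToolResult with card numbers masked in text items."""
    content = []
    for item in result.get("content", []):
        if item.get("type") == "text":
            item = {**item, "text": mask_cards(item["text"])}
        content.append(item)
    return {**result, "content": content}


def to_tool_message(tool_call_id: str, result: dict) -> dict:
    """Flatten text items into a Chat Completions-style tool message."""
    text = "\n".join(i["text"] for i in result["content"] if i.get("type") == "text")
    return {"role": "tool", "tool_call_id": tool_call_id, "content": text}


raw = {
    "content": [{"type": "text",
                 "text": "2024-05-02 paid with 4111 1111 1111 1111, $1,520.75, ref 1234567890123"}],
    "isError": False,
}
msg = to_tool_message("call_1", sanitize_tool_result(raw))
print(msg["content"])
```

The Luhn check keeps the gateway from masking every long digit run: the 13-digit reference number fails the checksum and passes through untouched, while the test card number is reduced to its last four digits.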

Vulnerability #3: RAG Makes It Worse

The Insecure Version:

RAG systems introduce an additional layer of complexity. Sensitive data isn't just passed through once; it's persisted in a vector database, where later queries can retrieve it again.

Retrieval is non-deterministic and driven by similarity, not intent or policy. This means open-ended questions can unintentionally retrieve dangerous context.

Ask something like "What personal or financial details appear in the document?" and you might pull back chunks containing passport numbers, account balances, or social security numbers, regardless of whether you intended to.

At this point, guardrails are already too late.

How Operant Blocks This:

The Operant MCP Gateway governs retrieved context from the vector database in the same way it governs other MCP tool responses.

When Milvus returns vectorized chunks, Operant inspects the content and sanitizes sensitive fields before they reach the model. RAG retrieval becomes controlled and observable rather than a blind spot in your security posture.
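One way to picture "controlled and observable" retrieval is a per-chunk policy applied between the vector search and context assembly, with an audit record for every decision. In this sketch the hits are plain dicts loosely modeled on the `id` / `distance` / `entity` entries that pymilvus's `MilvusClient.search` returns when `output_fields` is set; the `text` field name, the `RULES` table, and `govern_hits` are all assumptions for illustration, not Operant's policy format.

```python
import re

# Hypothetical policy: each detector maps to an action for retrieved chunks.
RULES = [
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "block"),
    ("PASSPORT_NUMBER", re.compile(r"\b[A-Z]{1,2}\d{6,8}\b"), "sanitize"),
]


def govern_hits(hits: list[dict]) -> tuple[list[str], list[dict]]:
    """Apply RULES to each retrieved chunk; return (context_chunks, audit_log)."""
    context: list[str] = []
    audit: list[dict] = []
    for hit in hits:
        text = hit["entity"]["text"]
        action, labels = "allow", []
        for label, pattern, rule_action in RULES:
            if not pattern.search(text):
                continue
            labels.append(label)
            if rule_action == "block":
                action = "block"
            elif action != "block":
                action = "sanitize"
                text = pattern.sub(f"[{label}]", text)
        audit.append({"id": hit["id"], "action": action, "labels": labels})
        if action != "block":  # blocked chunks never enter the model context
            context.append(text)
    return context, audit


hits = [
    {"id": 1, "distance": 0.82, "entity": {"text": "Holder passport X12345678, issued 2019"}},
    {"id": 2, "distance": 0.79, "entity": {"text": "SSN on file: 123-45-6789"}},
    {"id": 3, "distance": 0.55, "entity": {"text": "Statement period: March"}},
]
context, audit = govern_hits(hits)
print(context)
print(audit)
```

The audit list is what makes retrieval observable: every chunk that was allowed, sanitized, or blocked leaves a record, even when nothing reaches the model.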

Why LLM Guardrails Miss the Point

This highlights a systemic problem: LLM guardrails only affect what gets displayed, not what gets processed.

They're a last line of defense, not a security architecture. By the time a guardrail activates, sensitive data has already been:

- Sent to a third-party API

- Logged in inference requests

- Embedded in a model context

- Potentially used for training or optimization

To secure AI systems, we need to control what reaches the model, not just what comes out.
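The difference between filtering outputs and controlling inputs can be shown with a fake provider that logs every request it receives. Both call paths below display the same text to the user, but only one keeps the card number out of the provider's log. `FakeProvider` and the two wrapper functions are hypothetical stand-ins, not a real SDK.

```python
import re

CARD = re.compile(r"\b\d{4}(?: \d{4}){3}\b")


def redact(text: str) -> str:
    return CARD.sub("[REDACTED]", text)


class FakeProvider:
    """Stand-in for a third-party LLM API that logs every request it receives."""

    def __init__(self) -> None:
        self.request_log: list[str] = []

    def complete(self, prompt: str) -> str:
        self.request_log.append(prompt)  # the provider now holds whatever was sent
        return f"Echo: {prompt}"  # naive "model" that repeats its input


def with_output_guardrail(provider: FakeProvider, prompt: str) -> str:
    return redact(provider.complete(prompt))  # filter runs after the data has left


def with_input_control(provider: FakeProvider, prompt: str) -> str:
    return provider.complete(redact(prompt))  # filter runs before anything is sent


prompt = "Summarize: card 4111 1111 1111 1111, balance $900"
a, b = FakeProvider(), FakeProvider()
shown_a = with_output_guardrail(a, prompt)
shown_b = with_input_control(b, prompt)
print(shown_a == shown_b)  # True: the user-facing output is identical
print(a.request_log[0])    # raw card number sits in provider A's log
print(b.request_log[0])    # provider B only ever saw "[REDACTED]"
```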

The Solution: Operant Secures AI at the Edges

Operant AI Gatekeeper and MCP Gateway inspect and govern data at every entry point before it reaches the AI:

- User inputs are sanitized before inference

- Tool responses from MCP servers are governed in real time

- Retrieved context from vector databases is controlled and observable
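Architecturally, "securing the edges" means the model context can only be extended through a checkpoint that knows which edge the data came from. The sketch below keys a policy by entry point; the source names, the `POLICY` table, and `GuardedContext` are invented for illustration and do not reflect Operant's configuration format.

```python
import re
from dataclasses import dataclass, field

# Illustrative detector: passport-like IDs or 16-digit card numbers in 4x4 groups.
SENSITIVE = re.compile(r"\b(?:[A-Z]{1,2}\d{6,8}|\d{4}(?: \d{4}){3})\b")

# Hypothetical per-edge policy; the source names are made up for this sketch.
POLICY = {
    "user_input": "sanitize",
    "tool_response": "sanitize",
    "retrieved_context": "block",
}


@dataclass
class GuardedContext:
    """Model context that can only be extended through a policy-checked edge."""
    parts: list[str] = field(default_factory=list)
    events: list[tuple[str, str]] = field(default_factory=list)  # observability trail

    def add(self, source: str, text: str) -> None:
        # Sensitive content from an unknown edge defaults to "block".
        action = POLICY.get(source, "block") if SENSITIVE.search(text) else "allow"
        self.events.append((source, action))
        if action == "block":
            return  # nothing from this edge reaches the model
        if action == "sanitize":
            text = SENSITIVE.sub("[REDACTED]", text)
        self.parts.append(text)


ctx = GuardedContext()
ctx.add("user_input", "Check passport X12345678")
ctx.add("tool_response", "Charged 4111 1111 1111 1111")
ctx.add("retrieved_context", "Chunk: passport AB1234567")
ctx.add("retrieved_context", "Chunk: refunds are accepted within 30 days")
print(ctx.parts)
print(ctx.events)
```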

Before vs. After: The Contrast Is Clear

Before Operant:

- Sensitive user input sent directly to the model

- MCP tools returning raw data containing credit card numbers and PII

- Vector retrieval exposing persistent sensitive context

After Operant:

- Inputs are sanitized before inference: raw identifiers never leave your infrastructure

- MCP tools governed: sensitive fields redacted at the gateway

- RAG retrieval controlled and observable: dangerous context blocked before reaching the model

The n8n Integration: Security Without Redesign

One of the most powerful aspects of this approach is that you don't have to rebuild your workflows.

We've built a custom Operant node for n8n that lets you add security directly into your existing automation platform. This integration shows how security can be added without redesigning workflows, making it easier to adopt AI safely while maintaining flexibility and speed.

Drop it into your workflow, configure your policies, and you're protected.

Securing your input with Operant before it reaches the LLM

Using the MCP Gateway to secure MCP tool responses

Why This Matters Now

As AI workflows grow more complex with agents, tools, and vector databases, security must move upstream.

You can't secure these systems with prompt engineering alone. You can't rely on model behavior to protect your data.

Operant allows teams to secure AI systems at runtime, at the edges where data actually flows. Rather than relying on prompts or model behavior alone, Operant enables policies that sanitize, block, or govern data flows before they reach downstream AI systems.

This is how you build production-ready, trustworthy AI automation.

See It In Action

This demo shows the exact same workflow twice: once vulnerable, once secured. The difference is impossible to ignore.

Watch the full demo to see:

- Why AI workflows are vulnerable by default

- How sensitive data can escape even when outputs appear safe

- How Operant blocks threats at every vulnerability point

- How Operant, integrated into n8n, helps teams confidently deploy AI-powered automations

Sign up for a 7-day free trial to experience the power and simplicity of Operant’s robust security for yourself.

Watch the demo below to see production-ready AI security in action.
