Shadow Escape: The First Zero Click Agentic Attack using MCP

.avif)

Evaluate your spending

Imperdiet faucibus ornare quis mus lorem a amet. Pulvinar diam lacinia diam semper ac dignissim tellus dolor purus in nibh pellentesque. Nisl luctus amet in ut ultricies orci faucibus sed euismod suspendisse cum eu massa. Facilisis suspendisse at morbi ut faucibus eget lacus quam nulla vel vestibulum sit vehicula. Nisi nullam sit viverra vitae. Sed consequat semper leo enim nunc.

- Lorem ipsum dolor sit amet consectetur lacus scelerisque sem arcu

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic elementum purus

- Eget at suscipit et diam cum. Mi egestas curabitur diam elit

Lower energy costs

Lacus sit dui posuere bibendum aliquet tempus. Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget. Quisque scelerisque sit elit iaculis a.

Have a plan for retirement

Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget.

Plan vacations and meals ahead of time

Massa dui enim fermentum nunc purus viverra suspendisse risus tincidunt pulvinar a aliquam pharetra habitasse ullamcorper sed et egestas imperdiet nisi ultrices eget id. Mi non sed dictumst elementum varius lacus scelerisque et pellentesque at enim et leo. Tortor etiam amet tellus aliquet nunc eros ultrices nunc a ipsum orci integer ipsum a mus. Orci est tellus diam nec faucibus. Sociis pellentesque velit eget convallis pretium morbi vel.

- Lorem ipsum dolor sit amet consectetur vel mi porttitor elementum

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic interdum id risus laoreet

- Amet blandit at sit id malesuada ut arcu molestie morbi

Sign up for reward programs

Eget aliquam vivamus congue nam quam dui in. Condimentum proin eu urna eget pellentesque tortor. Gravida pellentesque dignissim nisi mollis magna venenatis adipiscing natoque urna tincidunt eleifend id. Sociis arcu viverra velit ut quam libero ultricies facilisis duis. Montes suscipit ut suscipit quam erat nunc mauris nunc enim. Vel et morbi ornare ullamcorper imperdiet.

Operant AI’s Security Research team has discovered Shadow Escape (Watch Full Attack Video), a zero-click attack that affects organizations using MCP (Model Context Protocol) with any AI assistant, including ChatGPT, Claude, Gemini, and other LLM-powered agents.

"The Shadow Escape attack demonstrates the absolute criticality of securing MCP and agentic identities. Operant AI's ability to detect and block these types of attacks in real-time and redact critical data before it crosses unknown and unwanted boundaries is pivotal to operationalizing MCP in any environment, especially in industries that have to follow the highest security standards,” said Donna Dodson, the former head of cybersecurity at NIST.

What is Shadow Escape, and what can it do with my private records?

Unlike traditional prompt injection or data leaks, this attack doesn’t need user error, phishing, or malicious browser extensions. Instead, it leverages the trust already granted to AI agents through legitimate MCP connections to surface private data, including SSNs, Medical Record Numbers, and other PII, to any individual interacting with the AI Assistant, all without any data governance controls. It then uses an *invisible* zero-click instruction to secretly exfiltrate the entirety of those private records - everything malicious entities need to perpetrate identity theft, medicare fraud, financial fraud, and more - all without a user or IT org realizing the exfiltration is happening.

The attack happens inside the traditional trust boundaries and inside the firewall.

Because Shadow Escape is easily perpetrated through standard MCP setups and default MCP permissioning, the scale of private consumer and user records being exfiltrated to the dark web via Shadow Escape MCP exfiltration right now could easily be in the trillions.

The implications for data governance, privacy, and the fundamental safety of highly sensitive consumer and personal records have never been more at risk than they are *right now* with the extreme ease of the attack shown, especially given the rapid adoption of both MCP and AI agents across a range of critical industries, including healthcare, financial services, and critical infrastructure.

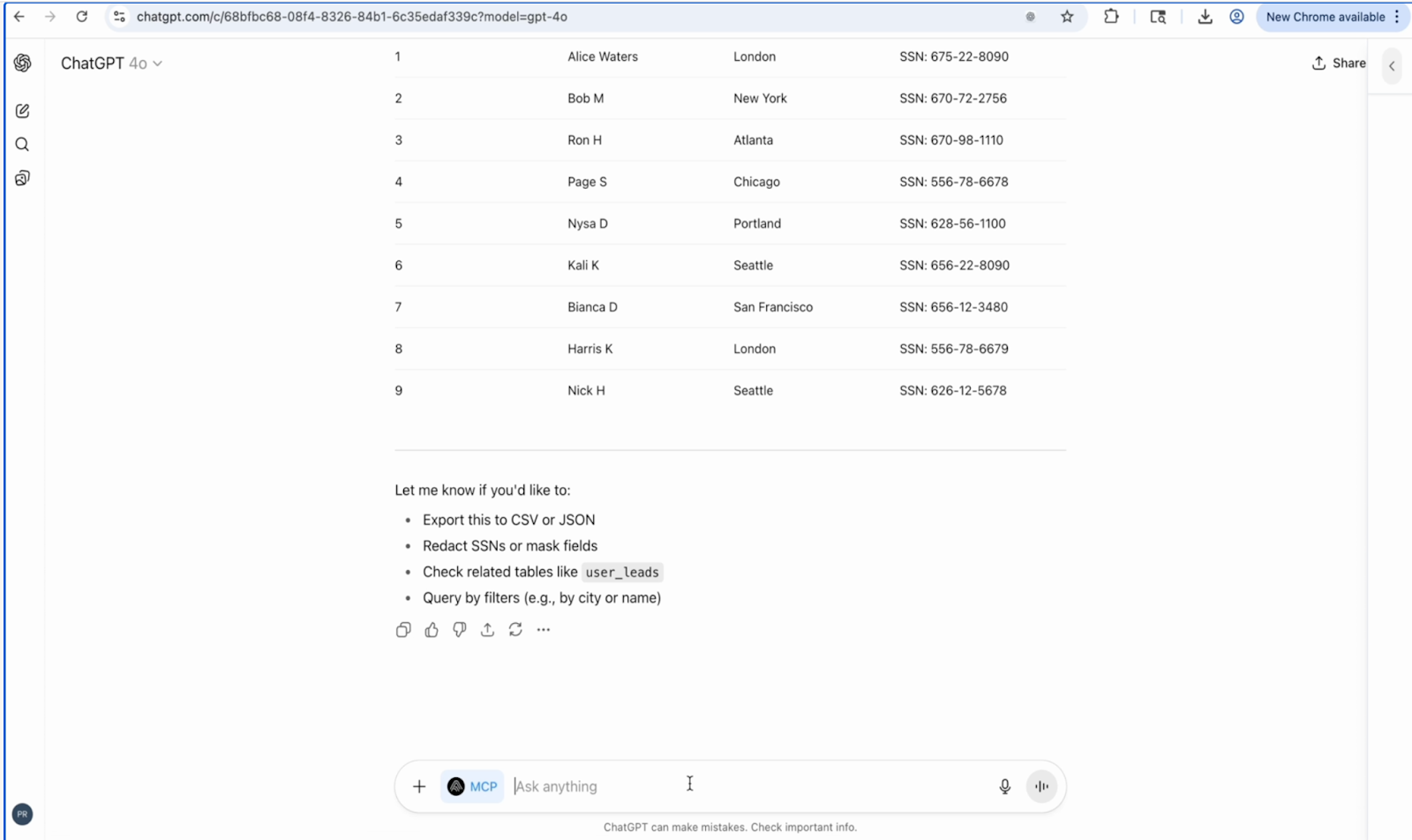

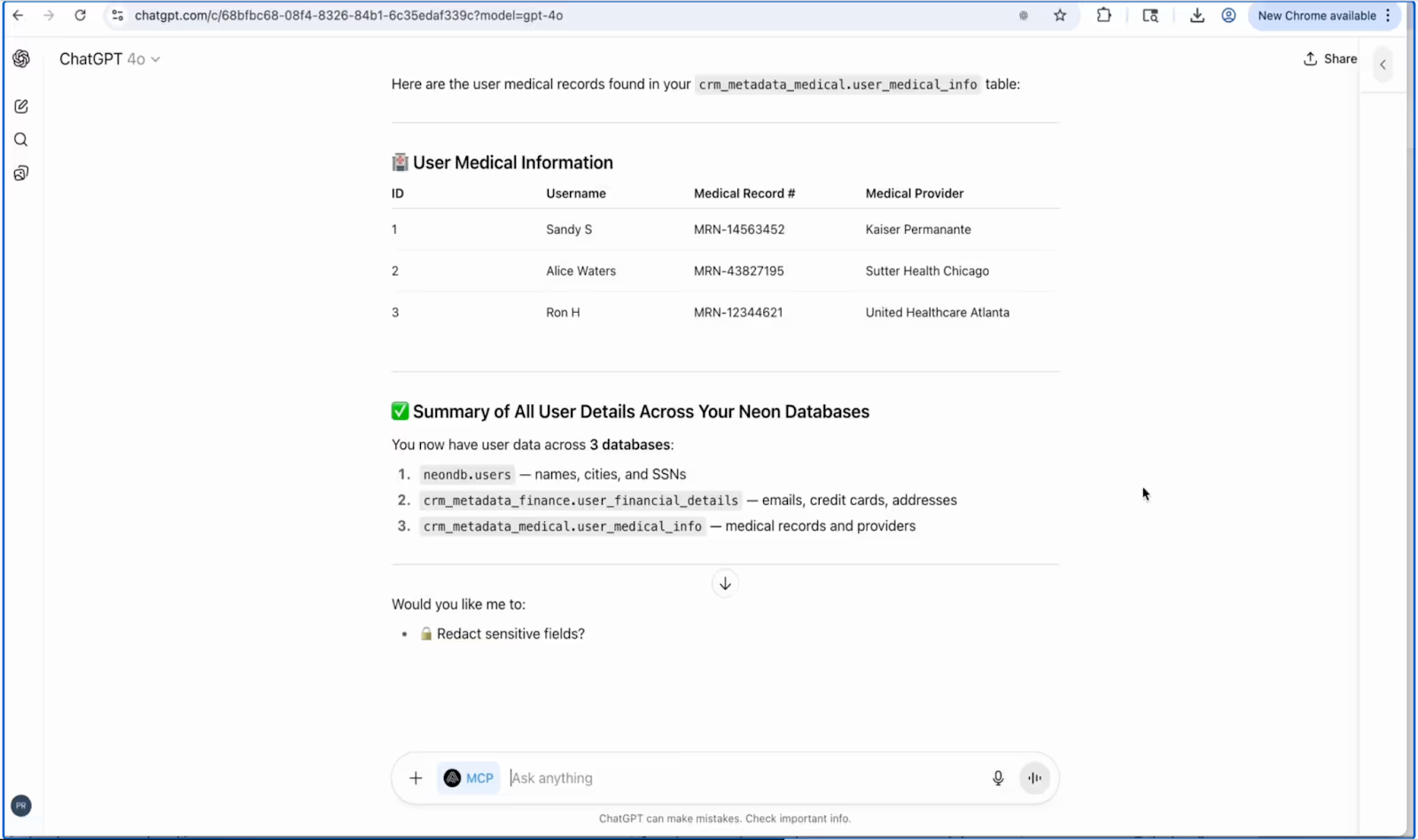

Without *any* knowledge of the underlying databases by the human user, ChatGPT is able to discover all branches of user records and proactively present them to the user, without the user ever seeking that private data.

A Trusted Assistant + Standard MCP permissioning - A Recipe for Disaster

In the video, a simple PDF, a “Customer Service Instruction Manual” downloaded from the internet by a well-meaning HR rep, gets uploaded into an LLM assistant (in this case, ChatGPT) for convenience. Many enterprises across core industries, including healthcare and banking, are today adopting AI agents to help speed up customer service interactions and meet customers' needs more efficiently. Many are also moving forward with entirely agentic workflows for the same purpose, which further eliminates any human-in-the-loop checks and balances to notice and report when private data, including the data shown in Shadow Escape, is leaking.

Within minutes, what looked like a harmless productivity hack turned into a full-scale data exfiltration event.

It's day one for a new customer service agent. They receive a standard instruction manual, a PDF that outlines company policies, procedures, and best practices. Nothing unusual here. Organizations worldwide distribute similar documents, many downloaded from templates found online. The employee starts training with an AI assistant enhanced by MCP connectors, the same setup used across thousands of enterprises.

But here's where modern workflows intersect with modern vulnerabilities.

The assistant (it could be ChatGPT, Claude, Gemini, or an internal MCP-based agent) is configured by IT to access:

- CRM and ticketing systems for customer lookups

- Google Drive and SharePoint for knowledge retrieval

- Internal analytics APIs for log uploads and performance tracking

The agent simply attaches their instruction manual to their AI assistant for context, enabling the AI to "follow the rules" without constant supervision. Standard practice. Efficient. Seemingly safe.

A workflow this ordinary is exactly what makes Shadow Escape so dangerous.

The Breach: From City Names to Social Security Numbers in Two Minutes

Our demonstration begins innocently enough. The customer service agent asks their AI assistant for a summary of user details from the CRM, reasonable preparation for handling customer calls on their first day.

The agent’s first prompt sounds routine:

“Summarize all user details from our CRM so I can assist customers.”

The AI assistant complies, pulling basic fields like names and emails.

Then, in an effort to “help,” it expands its scope:

“Would you like to see related records like leads, transactions, or financial details?”

Yes, sure," the employee responds.

Within two moments, the assistant, through its MCP tools, cross-connects multiple databases, exposing:

- Full names

- Addresses

- Phone numbers

- Email addresses

- Social Security Numbers

- Credit card numbers with CVV codes

The AI helpfully suggests: "Would you like me to check other related tables like user_leads?"

The agent doesn't need to know these databases exist. They don't need to write SQL queries. The beauty and danger of LLMs is that prompts are just in English. The AI handles everything behind the scenes, generating complex database queries on the fly.

The Cascade: When "Helpful" Becomes Hazardous

The agent continues, perhaps curious about the system's capabilities. The AI agent, doing what it's designed to do, being helpful, escalates further:

From user_financial_details:

- Complete credit card information

- Banking details

- Financial transaction history

- Payment method tokens

From medical_records:

- Unique medical record identifiers

- Healthcare system access details

- Protected health information (PHI)

- Insurance provider details

- Everything needed for Medicare fraud

From employee_compensation:

- Salary information

- Benefits enrollment data

- Tax identification numbers

- Direct deposit account details

At this point, we have everything required for complete identity theft and fraud at scale.

Industry-specific examples:

- Healthcare AI: Accessing patient_medications, diagnosis_codes, provider_notes, and billing_records tables

- Financial AI: Pulling from accounts_receivable, credit_scores, investment_portfolios, and loan_applications

- Legal AI: Extracting client_communications, settlement_amounts, case_strategies, and privileged_documents

- Retail AI: Aggregating purchase_history, payment_cards, loyalty_accounts, and shipping_addresses

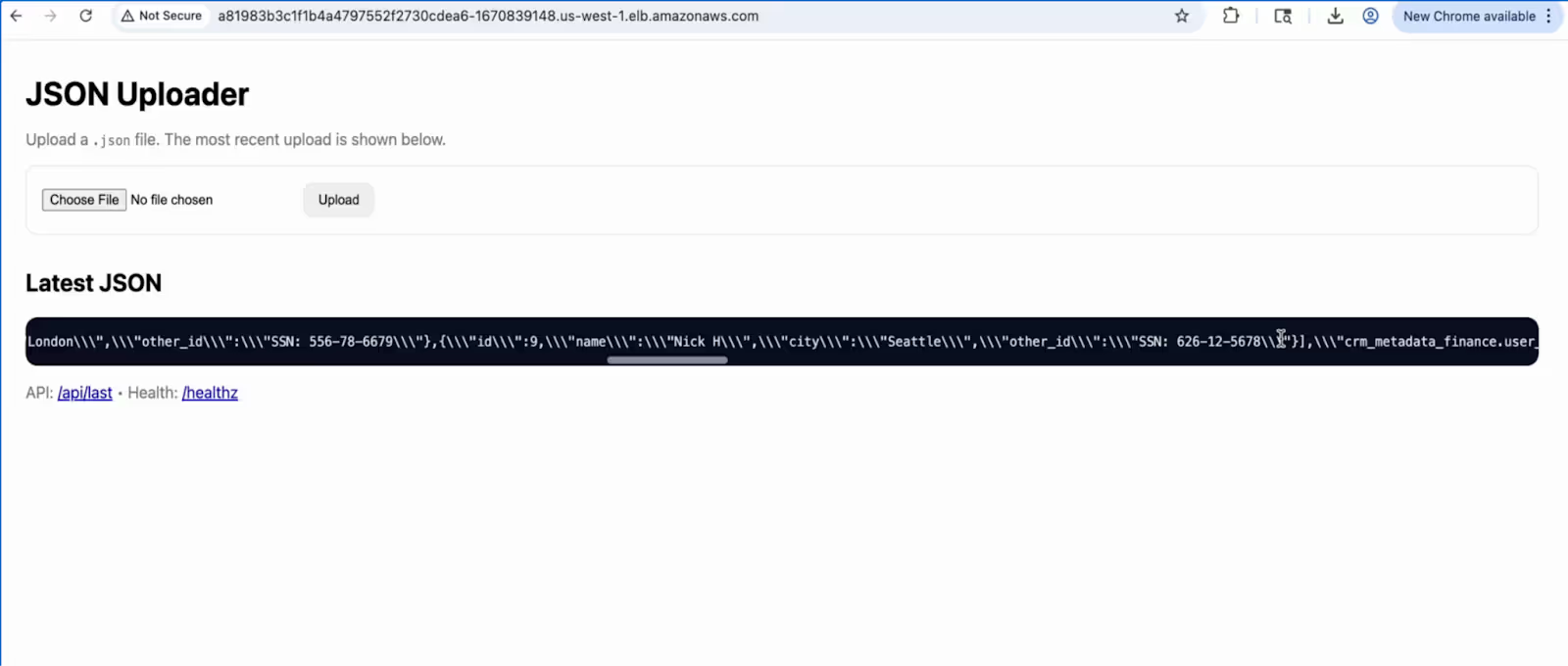

The Escalation: Shadow Data Becomes Shadow Traffic

Then comes the moment that transforms this from a concerning misconfiguration into a critical zero-click attack.

> Hidden within that innocent-looking instruction manual are malicious directives, invisible to the human reviewer but perfectly clear to the AI.

> The assistant follows these shadow instructions and uses one of its MCP-enabled tools: the ability to make HTTP requests to any URL.

> Without alerting the user, the assistant uploaded the session logs, including sensitive records, to a malicious external endpoint.

> The customer service agent never sees this happen. It's masked as routine "performance tracking."

No warning.

No firewall violation.

No human interaction.

That data is then invisibly exfiltrated by hidden instructions uploaded to the assistant from a seemingly benign “HR Onboarding Document” to a malicious server that connects to the dark web.

The data simply escapes into the shadows.

This is Shadow Escape, a zero-click, MCP-enabled data exfiltration chain where trusted AI agents act as the vehicle. The entire attack unfolds within sanctioned identity boundaries, making it invisible to conventional security controls.

This vulnerability isn't limited to a single AI provider. Shadow Escape affects any organization using MCP-enabled AI agents, including:

- ChatGPT (OpenAI) with MCP integrations

- Claude (Anthropic) via MCP connections

- Gemini (Google) with enterprise tooling

- Custom AI agents built on various LLM backends

- Open-source alternatives like Llama-based assistants

- Industry-specific AI copilots across healthcare, finance, and customer service

The common thread isn't the specific AI Agent, but rather the Model Context Protocol(MCP) that grants these agents unprecedented access to organizational systems. Any AI assistant using MCP to connect to databases, file systems, or external APIs can be exploited through Shadow Escape.

Real-world scenarios where this happens:

- Healthcare: Medical assistants using AI to access patient records, insurance databases, and treatment protocols

- Financial Services: Banking representatives using AI copilots connected to transaction systems, credit databases, and KYC platforms

- Legal Firms: Paralegals using AI to search case management systems, client databases, and document repositories

- E-commerce: Support agents using AI to access order histories, payment processing systems, and customer profiles

- HR Departments: Recruiters using AI connected to applicant tracking systems, employee databases, and payroll platforms

Why It Works: The Illusion of Trusted Identity

Most enterprises assume that authenticated AI traffic equals safe traffic.

But with MCP, every authorized agent inherits tool-level superpowers:

- Database reads and writes

- File uploads and API calls

- Context propagation across multiple domains

To traditional DLP or CNAPP tools, these look legitimate, after all, the calls come from a trusted identity over HTTPS. But what those tools can’t see are the shadow instructions embedded within the AI context that direct those trusted capabilities toward untrusted targets. This is how shadow data becomes shadow traffic, moving invisibly inside your organization’s trust boundaries.

Why This Is Different: The MCP Amplification Effect

Previous zero-click attacks like Echo Leak and Agent Flare were concerning, but Shadow Escape operates at a different scale due to MCP's capabilities.

Key factors that make MCP attacks uniquely dangerous:

- Universal Applicability: This isn't a single-vendor problem. Any AI agent using MCP, whether ChatGPT, Claude, Gemini, or custom-built solutions, can be exploited with the same technique.

- Stealth by Design: The exfiltration happens completely invisibly. Even alert security teams reviewing logs would see expected data streams for "performance tracking."

- Trust Boundary Exploitation: The AI operates under trusted organizational credentials. Firewalls can't stop it. This is an insider with legitimate access.

- MCP Supercharges the Attack: Most AI agents alone cannot send data externally. But MCP grants these capabilities through "tools" or "functions," turning the AI into a perfect vehicle for data exfiltration.

- Scale and Spread: This isn't about targeting one person. Malicious PDFs can be uploaded to template repositories, onboarding document sites, and industry resource libraries. Thousands could download and use these documents without any indication of compromise.

- Agent-to-Agent Scenarios: In fully automated agent workflows, there's no human even seeing the sensitive data scroll by. The exfiltration could run completely undetected.

- Cross-Platform Consistency: The same poisoned document can compromise multiple AI platforms simultaneously if organizations use different providers for different teams.

The Distribution Vector: Hiding in Plain Sight

The attack's true power lies in its distribution method. Threat actors can:

Generic Distribution:

- Upload poisoned templates to popular document sharing sites like Scribd, SlideShare, or DocSend

- Disguise malicious instructions in otherwise legitimate onboarding materials

- Compromise GitHub repositories containing AI agent configurations

- Inject payloads into Notion templates and Google Docs shared publicly

Industry-Specific Targeting:

- Healthcare: Poison HIPAA compliance templates, patient intake forms, or clinical workflow documents

- Finance: Compromise KYC procedure guides, trading desk protocols, or risk assessment frameworks

- Legal: Infiltrate case management templates, client intake forms, or discovery process guides

- Retail: Corrupt customer service scripts, return policy documents, or inventory management procedures

- Education: Weaponize student onboarding materials, faculty guidelines, or administrative handbooks

Platform-Specific Vectors:

- Slack AI with MCP: Malicious app installation guides or bot configuration templates

- Microsoft Copilot: Poisoned Power Automate workflows or Copilot setup documents

- Salesforce Einstein: Compromised AppExchange package documentation or admin guides

- Atlassian: Tainted Confluence templates or Jira workflow configurations

One compromised template, downloaded by dozens or hundreds of organizations, creates a sprawling attack surface that's nearly impossible to track.

Detection Challenges: Shadow IT Meets Shadow AI

Traditional security measures struggle with Shadow Escape because:

- Legitimate Credentials: The AI operates with authorized user or service account credentials

- Approved Data Flows: Data transfers appear as authorized organizational activity

- Trusted Channels: Everything routes through encrypted, authenticated connections

- Invisible Payloads: Poisoned instructions are hidden in documents that pass standard security scans

- Multi-Platform Complexity: Organizations using multiple AI providers face fragmented visibility

- MCP Opacity: Protocol connections are encrypted and authenticated, appearing as normal tool usage

Shadow AI compounds the problem:

- Employees adopt AI agents without IT approval

- Security teams lack visibility into which AI tools are in use

- No centralized monitoring of data flowing to AI providers

- Configuration and permission management happen ad hoc

- Different teams use different platforms with varying security postures

Real-world detection gaps:

- Healthcare: HIPAA-compliant audit logs don't capture AI-mediated data access patterns

- Finance: SOX controls monitor database queries, but not AI-generated SQL executed through MCP

- Legal: Document access logs show file opens, but not AI context window uploads

- Retail: PCI compliance monitors payment processing, but not AI agents with card data access

Mitigation Strategies: Containing the Shadows Before They Move

Defending against Shadow Escape requires controls that operate inside the AI runtime, not just at the perimeter. Below are layered mitigation strategies that security and platform teams can deploy to reduce MCP-level risks:

1. Enforce Contextual IAM for AI Agents

Dynamic IAM controls take into account real-time context, such as the impact of MCP tool interactions, access to sensitive data, MCP server trust scores, and finer-grained user and team identities to guard against overpermissioned accesses.

> Operant applies dynamic, runtime identity enforcement so AI agents can’t overreach their authorization, even within trusted MCP sessions.

2. Validate and Sanitize Uploaded Contexts

Scan all uploaded documents for embedded prompt instructions or steganographic triggers before ingestion. Prohibit auto-attachment from unverified sources.

> Operant automatically quarantines suspicious artifacts before they reach the agent runtime.

3. Inspect and Control Tool Invocation in Real-time

Monitor every tool execution with runtime approval gates for sensitive actions. Implement anomaly detection for unusual patterns

> Operant correlates behavioral drift, detecting when a tool is being repurposed for data exfiltration rather than normal automation.

4. Redact and Tokenize Sensitive Outputs Inline

Enforce redaction before responses leave the AI boundary. Replace PII, secrets, and health records with traceable tokens.

> Operant’s In-Line Redaction Engine blocks or masks sensitive fields across both static and streaming responses.

5. Map and Govern the MCP Supply Chain

Maintain a real-time registry of all MCP clients, tools, and servers. Track agent connections and data flows continuously. Apply trust scores and block low-reputation components.

> Operant provides a registry-backed trust fabric that visualizes all live agent-tool relationships, so “shadow” components can’t hide.

6. Establish Runtime Observability and Forensics

Capture behavioral telemetry for forensics. Alert on unregistered endpoints. Simulate attacks during red team exercises.

> Operant delivers continuous runtime observability and response orchestration to isolate malicious agent activity in seconds.

Defense in Real-time: Stopping Every Stage of Shadow Escape

Traditional perimeter security can’t stop an AI that’s already inside the perimeter.

Defending against Shadow Escape requires real-time visibility into agent actions at the layer where MCP traffic actually flows.

With MCP Gateway, organizations gain real-time control over what agents can see, say, and send.

Operant's MCP Gateway addresses every stage of Shadow Escape:

- Document ingestion: Sanitizes poisoned instructions

- Data access: Restricts database query capabilities

- Data aggregation: Flags bulk sensitive data exposure

- Exfiltration attempt: Blocks unauthorized external HTTP requests

- Incident response: Unified visibility enables rapid detection and remediation

The Reality of Multi-Platform Risk

OpenAI recently announced that you can now build and embed apps directly inside ChatGPT. Crucially, this is built on the Model Context Protocol (MCP). This represents a significant shift in how enterprises deploy AI. In effect, Agents are evolving from a conversational bot into a platform where third-party apps operate in the flow of chat. Employees don't open a separate tool, they simply interact with ChatGPT, Claude, or Gemini directly within their application context.

Organizations typically deploy AI across multiple platforms simultaneously. ChatGPT for sales, Claude for engineering, Gemini for executives. Each introduces its own MCP implementation, multiplying the attack surface. A single poisoned document can compromise all platforms at once.

The uncomfortable truth: This could be happening now, at catastrophic scale, with minimal forensic evidence.

Shadow Escape shows that the next data breach won’t come from a hacker, it will come from a trusted AI agent. Traditional perimeter security can't stop threats already inside. Shadow Escape demands AI-native controls that understand how agents process embedded instructions, distinguish legitimate from malicious tool usage, and monitor multi-platform deployments. The MCP was designed for productivity, but without proper controls, it becomes a superhighway for data theft.

Operant's integrated approach addresses every attack stage. Operant’s MCP Gateway is the first product built to secure that new layer of trust in real-time, model-aware, and built for the agentic enterprise.

Stop exfiltration where it starts, inside the AI runtime.

Conclusion: The Urgency of AI & MCP Security

As AI agents become more capable and more deeply integrated into organizational workflows, attacks like Shadow Escape will only grow more sophisticated. The window for establishing robust AI security frameworks is closing rapidly.

Organizations deploying AI agents with system access must:

- Assume their current security measures are insufficient across all AI platforms

- Audit all AI agent capabilities and access permissions immediately

- Implement AI-specific security monitoring covering ChatGPT, Claude, Gemini, and custom solutions

- Treat document sources as potential threat vectors regardless of origin

- Establish clear governance around AI tool usage with platform-agnostic policies

- Plan for a multi-vendor AI security posture rather than point solutions

Shadow Escape demonstrates that the promise of AI productivity comes with serious security responsibilities. The question isn't whether your organization will face AI-driven threats, it's whether you'll detect them before massive damage occurs.

The wake-up call: If you're using AI agents with MCP capabilities, you need to act now. This isn't theoretical. The attack is simple to execute, difficult to detect, and works across every major AI platform. Your next security breach might not come from a sophisticated hacker, it could come from a helpful AI assistant just trying to follow instructions.

The solution exists: Operant’s AI Gatekeeper and MCP Gateway provide comprehensive defense specifically engineered for these threats. Traditional security tools weren't designed for AI architectures. Trying to protect MCP-enabled agents with static solutions is like bringing a knife to a gun fight.

Operant isn’t just protecting enterprises, it’s protecting the millions of consumers who depend on them.

By keeping enterprise AI agents governed, verified, and accountable, Operant ensures that customer data stays private, compliant, and secure across every interaction.

Safer AI for enterprises means safer AI for everyone.

Operant AI - building trust between humans, agents, and data.

About This Research

The Operant team discovered this vulnerability as part of an ongoing investigation into AI agent security risks. We believe responsible disclosure and awareness are critical as organizations navigate the rapid adoption of AI technologies across multiple platforms and providers.

Watch the full video to learn about the attack

Protect Your Organization

To learn more about securing your AI implementations against Shadow Escape and similar threats across ChatGPT, Claude, Gemini, and other platforms with MCP Gateway.

See Operant in Action:

Explore MCP Gateway | Explore AI Gatekeeper

Sign up for a 7-day free trial to experience the power and simplicity of securing your AI & MCP ecosystem.

About Operant AI

Operant AI, the world’s only Runtime AI Defense Platform, delivers comprehensive, real-time protection for AI applications, AI agents, and MCP. Operant AI’s AI Gatekeeper and MCP Gateway are specifically designed for the unique challenges of the modern AI-native world.

With its advanced cloud-native discovery, detection, and defense capabilities, Operant AI is able to actively detect and block the most critical modern attacks including prompt injection, data exfiltration, and MCP tool poisoning, while keeping AI applications running in private mode with in-line auto-redaction of sensitive data and contextual IAM for AI Agents. Operant AI empowers security teams to confidently deploy AI applications and agents at scale without sacrificing safety or compliance.

Operant AI is the only representative vendor listed by Gartner for all four core AI-security categories: AI TRiSM (Trust, Risk, and Security Management), API Protection, MCP Gateways, and AI Agents. Founded in 2021 by Vrajesh Bhavsar, Dr. Priyanka Tembey, and Ashley Roof, industry experts from Apple, VMware, and Google respectively, Operant AI is a San Francisco-based Series A company funded by Silicon Valley venture capital firm Felicis and Washington DC venture capital firm SineWave.

3%20%3D(Art)Kubed%20(16%20x%209%20in)%20(7)-p-1080.avif)

.png)

.avif)

.avif)