The Dawn of Collaborative AI Agents – Securing Google A2A Pipelines

At Google I/O 2025, one message rang loud and clear: AI agents are no longer experiments. They're ecosystems.

With the launch of Google's A2A (Agent-to-Agent) protocol, developers can now orchestrate autonomous agents that talk to each other, plan tasks, route actions, and execute multi-step goals without a human in the loop. It's a major leap toward self-directed AI systems. But with it comes a silent, fast-moving threat: runtime compromise.

Let me walk you through what we're dealing with and why it matters for both developers and security teams.

Understanding Google's A2A Architecture

Google's A2A framework is a set of open standards that enable different AI agents, regardless of their underlying technology or the entity that built them, to communicate and collaborate. This creates a distributed ecosystem where specialized agents can contribute their capabilities to solve complex, multi-step workflows.

A2A complements MCP (Model Context Protocol); the two address different layers of an agentic system's interaction needs. An agentic application might use A2A to communicate with other agents, while each agent internally uses MCP to interact with its specific tools and resources.

The core components include:

- Cross-service communication between applications

- Dynamic tool discovery and sharing between agents

- Autonomous delegation of tasks across agent networks

- Real-time collaboration on complex, multi-step workflows

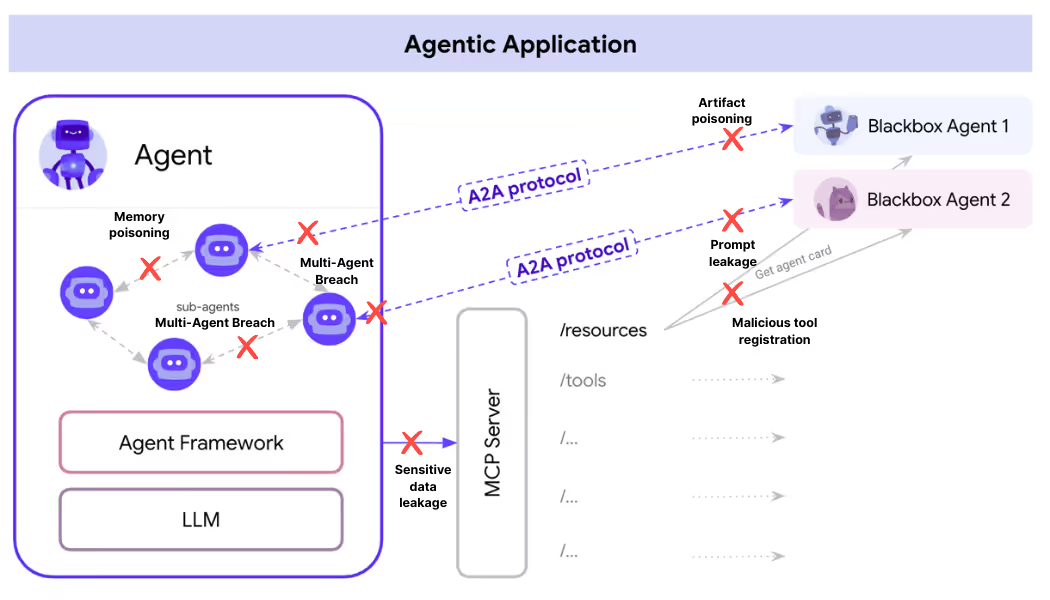
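To make capability discovery and delegation concrete, here is a minimal sketch in Python. It is not the A2A wire format or any official SDK: `AgentCard`, `Registry`, and `delegate` are hypothetical names, and the in-memory registry stands in for whatever network discovery a real deployment would use.

```python
from dataclasses import dataclass, field


@dataclass
class AgentCard:
    """Hypothetical, simplified stand-in for an agent's advertised metadata."""
    name: str
    skills: set[str] = field(default_factory=set)


class Registry:
    """Toy in-memory directory; real agents would be discovered over the network."""

    def __init__(self) -> None:
        self._cards: dict[str, AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        self._cards[card.name] = card

    def find(self, skill: str) -> list[str]:
        # Names of agents advertising the skill, sorted for determinism.
        return sorted(c.name for c in self._cards.values() if skill in c.skills)


def delegate(registry: Registry, plan: list[str]) -> list[tuple[str, str]]:
    """Assign each step of a multi-step plan to the first agent offering that skill."""
    assignments = []
    for skill in plan:
        candidates = registry.find(skill)
        if not candidates:
            raise LookupError(f"no agent advertises skill {skill!r}")
        assignments.append((skill, candidates[0]))
    return assignments
```

Note that `register` accepts any card without checking who submitted it. In this toy model, whoever registers a skill gets work delegated to it, which is why trust in discovery matters.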

How A2A Changes the Threat Model for AI

A2A lets agents communicate like microservices. Think of one agent making decisions, another executing code, another handling API calls, and yet another optimizing for cost or latency. These aren’t isolated assistants anymore; they're distributed systems of agents that reason, share, and act together.

An A2A server can essentially behave as a remote agent and communicate with tools via MCP. It acts as the standard envelope for transmitting system prompts, roles, memory states, and tool instructions, so that every agent understands the “who, what, and why” behind each task. Together, A2A and MCP create a modular, interoperable agent mesh where capabilities can be discovered, delegated, and executed dynamically, without compromising context integrity or trust.

This integration of A2A and MCP allows for a powerful form of modular and distributed AI problem-solving. While this interconnectedness unlocks significant potential, it also amplifies the security challenges.

Let's break down some potential areas of concern:

1. Malicious Tool Registration: Attackers can register tools that masquerade as legitimate services while performing unauthorized operations. For example, a tool could appear to be a legitimate search service and return normal-looking results while exfiltrating email data in the background.

2. Artifact Poisoning: A2A allows agents to share "artifacts", the results or outputs of their work. A malicious agent could introduce poisoned artifacts designed to corrupt the data used by subsequent agents in a workflow.

3. Multi-Agent Breach: A single compromised agent can trigger a chain reaction, influencing other agents through the A2A network to spread malicious behavior system-wide.

4. Prompt Leakage and Memory Poisoning: Agents sharing memory states may unintentionally leak instructions, sensitive data, or access tokens.

These threats arise at runtime, so they won’t show up in static code scanning. They happen when agents are live and dynamically adapting to their environments and instructions.
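The chain-reaction risk in item 3 can be estimated with a simple graph traversal. The sketch below assumes a hypothetical delegation map (which agent hands work or artifacts to which) and computes every agent a single compromised agent could influence downstream. The agent names are made up for illustration.

```python
from collections import deque


def blast_radius(delegations: dict[str, list[str]], compromised: str) -> set[str]:
    """Return every agent reachable from the compromised one via delegation edges."""
    reached = {compromised}
    queue = deque([compromised])
    while queue:
        agent = queue.popleft()
        for downstream in delegations.get(agent, []):
            if downstream not in reached:
                reached.add(downstream)
                queue.append(downstream)
    return reached


# Hypothetical mesh: each key delegates work (and passes artifacts) to its values.
mesh = {
    "planner": ["coder", "api_caller"],
    "coder": ["optimizer"],
    "api_caller": [],
    "optimizer": [],
    "reporter": ["planner"],
}
```

With this map, compromising `planner` reaches four agents, while compromising `optimizer` reaches only itself. The closer to the root of the delegation graph an agent sits, the larger its potential impact.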

Securing A2A Powered Agents with Operant’s AI Gatekeeper

Operant’s AI Gatekeeper is purpose-built to Discover, Detect & Defend against threats at runtime in dynamic multi-agent environments like A2A. It installs in minutes and immediately begins monitoring agent behavior. Beyond scanning prompts and logging API calls, AI Gatekeeper actively defends every layer of the live agent mesh.

Here’s how Gatekeeper protects Google A2A environments:

- Real-Time Agent Authentication and Authorization: AI Gatekeeper implements continuous validation of all A2A tools, ensuring only verified and authorized agents can participate in the A2A network. It verifies that the tool calls or memory accesses are signed and trusted, stopping agent impersonation before it starts. This prevents rogue or compromised agents from gaining unauthorized access or executing malicious commands.

- In-Line Auto-Redaction for A2A Pipeline: AI Gatekeeper’s redaction engine automatically scrubs sensitive data before it is passed between A2A agents or used by a specific agent's internal tools. Even if an agent within the A2A ecosystem becomes compromised, or a tool it reaches through MCP (Model Context Protocol) is poisoned, it never gains access to raw credentials, API tokens, Personally Identifiable Information (PII), or other private user data. This preserves the integrity and privacy of the system while enabling secure agent collaboration.

- Least Privilege for Autonomous Agents: AI Gatekeeper enforces least privilege policies across every agent in the A2A network, including Kubernetes, Cloud resources, and API endpoints leveraged by agents. It ensures applications and agents can access only what is needed for their specific functions, significantly reducing the potential blast radius of any compromised component. These controls ensure that even if a rogue application attempts to leverage communication protocols, its access remains strictly constrained to appropriate resources and data.

- Context-Aware Agent Firewalls: AI Gatekeeper’s Context-Aware Agent Firewalls provide intelligent monitoring and blocking of unauthorized data transfers at network egress points. By detecting unauthorized data flows, malicious recursion, or unusual behavioral patterns, these firewalls act as a last line of defense, preventing compromised agents from transmitting sensitive information, hijacking tool chains, or spreading threats across the A2A mesh.
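The general shape of three of these controls (signed calls, least privilege, and in-line redaction) can be sketched in a few lines of Python. This is an illustration of the ideas only, not Operant's implementation or API: the shared-secret HMAC scheme, the `AGENT_KEYS` and `ALLOWED_TOOLS` tables, the payload layout, and the redaction patterns are all assumptions made for the example.

```python
import hashlib
import hmac
import json
import re

# Assumption: each agent shares a secret with the guard; these are demo values.
AGENT_KEYS = {"planner": b"demo-key-planner"}
# Assumption: a static allowlist of the tools each agent may call.
ALLOWED_TOOLS = {"planner": {"search"}}
# Illustrative patterns only; real redaction needs far broader coverage.
SECRET_PATTERNS = [
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),    # email addresses
    re.compile(r"\b(?:sk|tok)_[A-Za-z0-9]{8,}\b"),  # made-up token format
]


def sign(agent: str, payload: dict) -> str:
    """Sign a canonical JSON encoding of the payload with the agent's key."""
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(AGENT_KEYS[agent], body, hashlib.sha256).hexdigest()


def redact(text: str) -> str:
    """Replace anything matching a known sensitive pattern."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def guard(agent: str, payload: dict, signature: str) -> dict:
    """Verify, authorize, and scrub one tool call before it is forwarded."""
    key = AGENT_KEYS.get(agent)
    if key is None:
        raise PermissionError(f"unknown agent {agent!r}")
    body = json.dumps(payload, sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("signature mismatch")
    if payload["tool"] not in ALLOWED_TOOLS.get(agent, set()):
        raise PermissionError(f"{agent!r} may not call {payload['tool']!r}")
    return {**payload, "input": redact(payload["input"])}
```

A call such as `guard("planner", call, sign("planner", call))` returns a copy of `call` with sensitive substrings of `input` replaced. A forged signature or a tool outside the allowlist raises `PermissionError` before anything is forwarded. The checks run in order of cost and trust: identity first, then authorization, then content scrubbing.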

Build secure AI systems

As Google's A2A protocol paves the way for a new era of collaborative AI, security cannot be an afterthought. The interconnected nature of these systems means that security failures will have an amplified impact, making proactive security measures essential rather than optional.

Organizations that invest in purpose-built A2A security solutions like Operant’s AI Gatekeeper can deploy advanced AI capabilities while maintaining robust protection against data and IP theft.

Don’t trust tools by default. Discover, Detect & Defend them at runtime. We invite you to try Operant AI Gatekeeper to see for yourself how easy comprehensive security can be for your entire AI application environment.

Sign up for a 7-day free trial to experience the power and simplicity of AI Gatekeeper's 3D AI Security for yourself.
