Three Overlooked but Critical Security Gaps Caused by 3rd Party APIs and Services

Evaluate your spending

Imperdiet faucibus ornare quis mus lorem a amet. Pulvinar diam lacinia diam semper ac dignissim tellus dolor purus in nibh pellentesque. Nisl luctus amet in ut ultricies orci faucibus sed euismod suspendisse cum eu massa. Facilisis suspendisse at morbi ut faucibus eget lacus quam nulla vel vestibulum sit vehicula. Nisi nullam sit viverra vitae. Sed consequat semper leo enim nunc.

- Lorem ipsum dolor sit amet consectetur lacus scelerisque sem arcu

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic elementum purus

- Eget at suscipit et diam cum. Mi egestas curabitur diam elit

Lower energy costs

Lacus sit dui posuere bibendum aliquet tempus. Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget. Quisque scelerisque sit elit iaculis a.

Have a plan for retirement

Amet pellentesque augue non lacus. Arcu tempor lectus elit ullamcorper nunc. Proin euismod ac pellentesque nec id convallis pellentesque semper. Convallis curabitur quam scelerisque cursus pharetra. Nam duis sagittis interdum odio nulla interdum aliquam at. Et varius tempor risus facilisi auctor malesuada diam. Sit viverra enim maecenas mi. Id augue non proin lectus consectetur odio consequat id vestibulum. Ipsum amet neque id augue cras auctor velit eget.

Plan vacations and meals ahead of time

Massa dui enim fermentum nunc purus viverra suspendisse risus tincidunt pulvinar a aliquam pharetra habitasse ullamcorper sed et egestas imperdiet nisi ultrices eget id. Mi non sed dictumst elementum varius lacus scelerisque et pellentesque at enim et leo. Tortor etiam amet tellus aliquet nunc eros ultrices nunc a ipsum orci integer ipsum a mus. Orci est tellus diam nec faucibus. Sociis pellentesque velit eget convallis pretium morbi vel.

- Lorem ipsum dolor sit amet consectetur vel mi porttitor elementum

- Mauris aliquet faucibus iaculis dui vitae ullamco

- Posuere enim mi pharetra neque proin dic interdum id risus laoreet

- Amet blandit at sit id malesuada ut arcu molestie morbi

Sign up for reward programs

Eget aliquam vivamus congue nam quam dui in. Condimentum proin eu urna eget pellentesque tortor. Gravida pellentesque dignissim nisi mollis magna venenatis adipiscing natoque urna tincidunt eleifend id. Sociis arcu viverra velit ut quam libero ultricies facilisis duis. Montes suscipit ut suscipit quam erat nunc mauris nunc enim. Vel et morbi ornare ullamcorper imperdiet.

Cloud-native applications can’t be completely isolated from outside influences, cocooned nicely and protected by a hard shell, so why do we focus our security so much on securing the outer layers?

Sure, having a hard shell can help, but almost all modern applications need to have at least some interactions with external parties, whether it’s 3rd party APIs like Stripe, Twilio, or Okta or 3rd party containers from a variety of solutions the application logic depends on. But the truth is that regardless of how hardened the outer shell of an app might seem, every external interaction for a cloud-native application opens up new possibilities for attackers to penetrate into your application environment and wreak havoc on the squishy, unprotected internals.

We often talk to companies who understand that firewalls and other old world security tools aren’t enough to secure the constantly shifting insides of their modern cloud-native applications, but when we dig deeper, we find that many teams lack a full understanding of exactly how much the dynamic nature of cloud-native traffic flows between application internals and 3rd parties creates major attack vectors that can’t be secured by run-of-the-mill solutions like API endpoint testing or infra-layer dashboards.

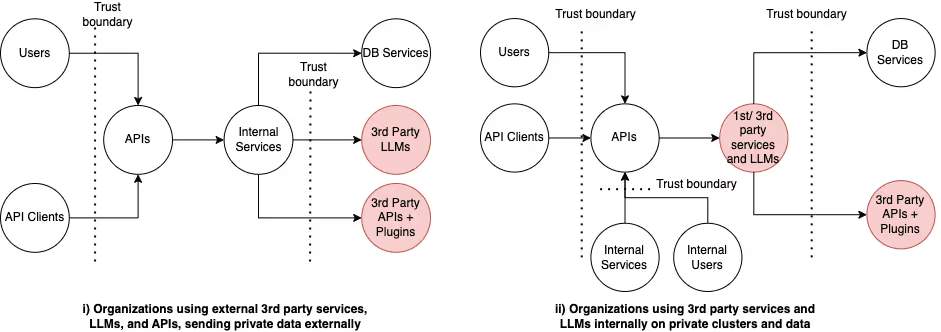

Based on the common 3rd party software and API usage patterns, we need to architect the appropriate trust boundaries. What applications actually need is security enforcement within the internals, so that they can do what they were built to do - including interfacing with 3rd parties - without the gaping security holes that open up when external traffic flows are initiated without proper security as part of the deployment.

Here are three ways that 3rd party interactions create open attack vectors and some solutions to help you harden your application internals to contain open risk and reduce the blast radius of attempted attacks.

1. Security Gaps Created by Internal API endpoints, beyond public endpoints

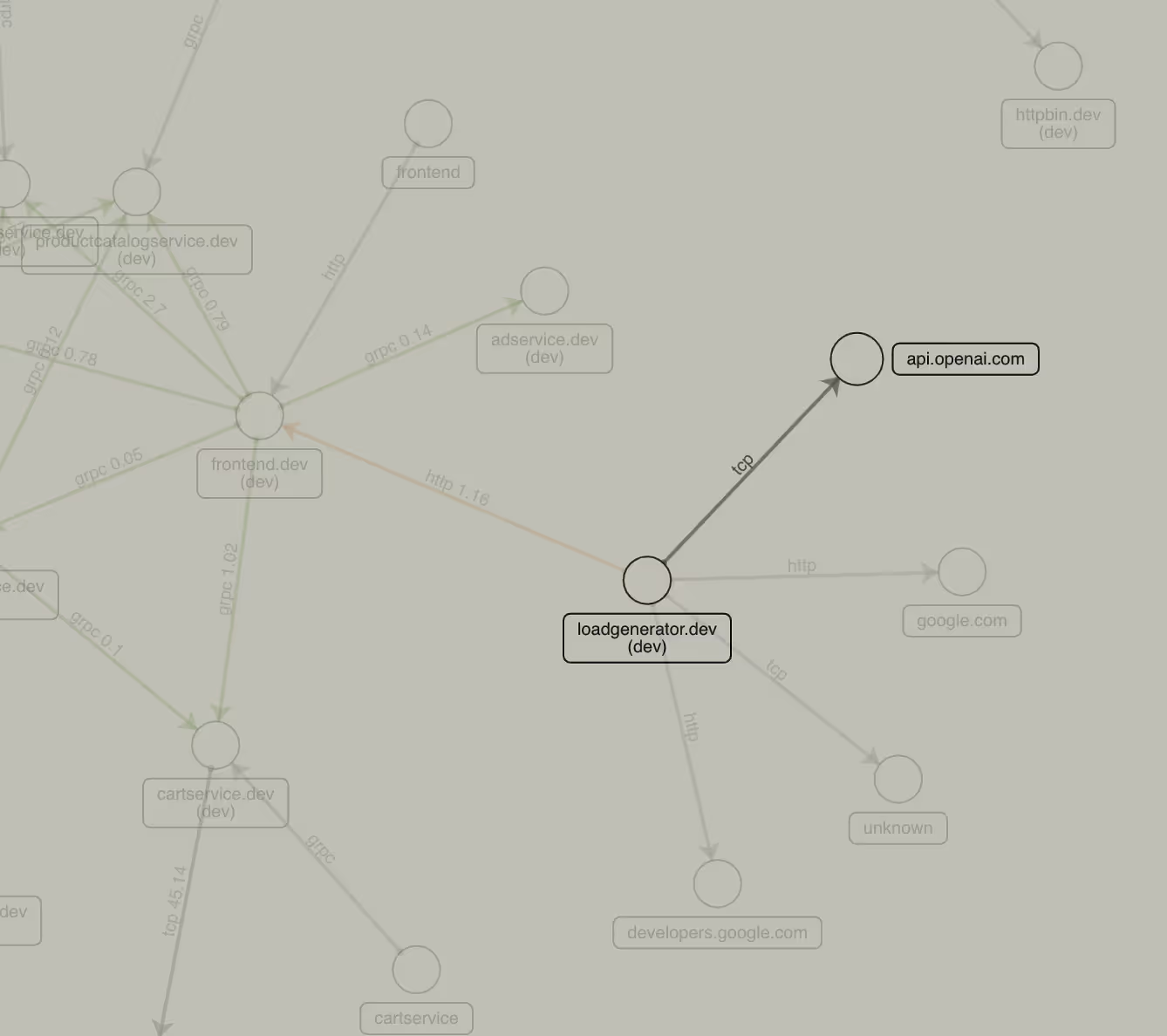

When organizations think about API security for their applications and services, they typically focus on just the public API endpoints. However, today’s application stacks have multiple layers that run deeper beyond the traditional public API endpoints that would typically front some monolithic application middleware and a database layer. Cloud-native microservices and containerized applications on the other hand end up talking to each other via multiple internal APIs developed in-house as well as a variety of 3rd party APIs - be it 3rd party object stores like S3 buckets, data lakes or streaming services, or 3rd party SaaS services like Twilio, Stripe and most recently OpenAI.

These multi-layer distributed application architectures disrupt the traditional trust boundaries which used to be at the public API layer alone, instead now proliferating wherever data flows within internal and external networks. Modern API and data flows are highly dynamic and are constantly evolving at runtime as internal and external API dependencies change at a fast pace thereby changing what data flows into and out of these API endpoints. This mandates the need for API security to extend beyond public APIs alone to in turn secure all the APIs in today’s multi-layer application stacks including internal and external 3rd party APIs. The arrival of LLMs have only compounded the risks of 3rd party APIs, especially when it comes to private data leakage in the public domain [we will dive deeper into LLM security in an upcoming post].

2. Security Gaps Created by Using External Publicly Available APIs

Applications integrate with SaaS services like Twilio, Stripe, and more recently, OpenAI and other LLMs over their public APIs. This usually means that applications end up sending internal organization data or even customer data to 3rd party APIs for processing, which has implications for both API and data security as well as regulatory compliance.

While many of the best practices around API authentication, authorization, and use of encryption are mandated for an organization’s own public APIs, best practices for communication with 3rd party APIs are often overlooked which can have dangerous outcomes, including:

- Applications sending private customer data over unencrypted connections without mandating encrypted communication channels could easily be sniffed and stolen.

- Authentication and authorization implementations in 3rd party APIs are a blind spot. They could be unimplemented or buggy - like for e.g., 3rd party API calls could be left open or may not require or appropriately check for API key expiration. Gaps in authentication and authorization checks can leave an organization’s internal applications vulnerable to lateral movement attacks as compromised keys could be exploited to steal data within 3rd party apps.

- Internal services deployed on platforms like Kubernetes as containers leave other pathways open for attackers to access 3rd party APIs. Containers could have unpatched vulnerabilities or unencrypted secrets that could be compromised by attackers to move laterally through the internal networks and gain unauthorized access to 3rd party apps.

With the introduction of LLMs, organizations ought to be even more concerned about private data and code leaks from their internal organizations becoming part of the public domain via trained embeddings within public LLM models for anyone on the internet to see. While employee access to public LLM APIs from their development laptops is a concern that has gained the attention of IT security teams, similar access from within a company’s product stacks to external LLM APIs that supplement previous in-house backend code with LLM capabilities could become an even bigger blind spot for application security teams, as feature development with GenAI tooling moves at a frenzied pace within organizations. This has resulted in many organizations entirely blocking access to public LLM APIs.

3. Security Gaps Created by 3rd party APIs as applications get packaged for deployment (internal to an organization)

Usually, this sort of internal deployment of 3rd party applications - be it open source, or private installations of 3rd party services like databases or a private instance of a closed source OpenAI LLM model is intuitively considered to be more secure, as the data private to an organization does not leave an organization’s networks. However, this assumed sense of security should be taken with a huge grain of salt!

3rd party applications and container packages are still a black box which could introduce their own set of vulnerabilities. Besides the container and package level vulnerabilities that they could introduce, the containers could have runtime misconfigurations, over privileged, and over permissioned service accounts and access keys that could expand the attack surface for an organization’s Kubernetes clusters if they were to be compromised.

While 3rd party services and APIs introduce their own set of vulnerabilities and security headaches, there is no doubt that their use is only going to grow over time as both LLMs and other SaaS services can vastly improve an organization’s productivity and unlock new features in the long run. So how do application security teams enable the use of such forward thinking technologies while still keeping organizational security and compliance needs front and center?

We suggest two ways of addressing the security concerns introduced by 3rd party APIs while continuing to support the accelerated pace of development that they enable.

SOLUTION ONE: Continuous visibility into which third party APIs and services have been accessed and who is accessing them.

Tracking which 3rd party services are being used internally and externally at runtime is the first step towards securing those accesses. While security teams might be aware of their public API endpoints as they are somewhat involved in testing them for security loopholes via API testing tools, tracking which 3rd party APIs are being used in their application backends and which data flows might have private customer or organizational data is still a glaring blind spot.

Security teams should also consider tracking the use of these APIs on a historical basis and gauge any drift from previously known 3rd party API used, both from the perspective of which API was used and also which internal services or identities ended up using the API. Unknown API calls could indicate a new security risk as it could be a sign of data exfiltration to a malicious endpoint, while a drift in the internal services calling external API endpoints might be an indication of a remote code injection attack via a vulnerable container process.

SOLUTION TWO: Enforcing least privilege for 3rd party software containers.

When 3rd party services end up getting deployed within internal Kubernetes clusters, it is important to track the permissions and privileges used by their containers at runtime to ensure that they do not leave open pathways for potential attackers to move laterally into other containers and namespaces leaving the entire cluster vulnerable. By enforcing least privilege, security teams can contain the blast radius of resources vulnerable to an attack in case vulnerabilities within 3rd party container images that they do not control end up getting exploited. An example of runtime enforcements that security teams could use would be to prevent 3rd party containers using over permissioned service accounts from getting deployed in the clusters in the first place.

While a combination of open source and home grown approaches could be used to custom build these solutions, it will be highly onerous to keep them updated with the fast pace of application development as the instrumentation and custom configurations will need constant updating creating more eng work and leaving a revolving door of vulnerabilities waiting in line for new security configs to catch up with the rest of the development road map. Operant’s capabilities are available with just a single-click deployment and automatically update without drift as your cloud-native environment grows.

Conclusions

Security teams, platform teams, developers and engineering leaders need to act quickly - to match the pace of security with the pace of application development with 3rd party APIs, especially as adoption of LLMs quickens. Attackers are already way ahead of where the defenses are, and many teams are at risk of falling further and further behind as they extend the “black boxes” deeper into their stacks without the teams or the knowledge or the transparency to manage them.

However, security teams should avoid feeling paralyzed by the arrival of these black box APIs, but instead bring foundational security controls that they already know of to the front and center of their application security roadmap.

To learn more about how Operant secures 3rd party APIs and services better than anything else out there, view a video demo or request a trial.

Message us at hello@operant.ai to start a conversation.

3%20%3D(Art)Kubed%20(16%20x%209%20in)%20(7)-p-1080.avif)

.png)

.png)