Operant AI Featured in Gartner’s Software Supply Chain Security Playbook

Today, we’re proud to announce that Operant AI has been named a representative vendor in Gartner’s Software Supply Chain Security Playbook.

Gartner warns that 80% of organizations will face software supply chain attacks by 2028. More than 700,000 malicious packages have already been identified, and AI-native threats such as model poisoning and prompt injection are multiplying rapidly. Enterprises can't afford security built for yesterday's risks: as they integrate open-source large language models (LLMs) and AI-native development tools, their exposure grows sharply.

Gartner stated that “Technology organizations have heavily adopted open-source LLMs, but they bring another vector for supply chain security risk, including vulnerable pretraining (model poisoning), model licensing restrictions, and weak model provenance.” It also advised “software engineering leaders who are using open-source AI repositories, such as Hugging Face, TensorFlow Hub, or PyTorch Hub, to begin evaluating the security risk of using third-party AI models.”

Operant AI's purpose-built platform protects what traditional tools miss: the entire AI supply chain, from open-source LLM validation and model provenance verification to runtime agent protection and MCP security. It delivers the comprehensive, AI-native defense that Gartner's research confirms is no longer optional but essential for any organization deploying AI at scale.
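Gartner's concern about weak model provenance points to a simple first control any team can apply: record a cryptographic digest of each third-party model file when it is reviewed, and refuse to load a file whose digest no longer matches. The Python sketch below illustrates that generic technique under stated assumptions; it is not Operant AI's implementation, and the file name and the `PINNED_SHA256` manifest are hypothetical placeholders.

```python
import hashlib
import hmac
from pathlib import Path

# Hypothetical manifest of approved model files and their SHA-256 digests,
# recorded when each model was first reviewed. Replace the placeholder value.
PINNED_SHA256: dict[str, str] = {
    "model.safetensors": "<expected-hex-digest>",
}


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large weight files are never fully loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned: dict[str, str]) -> bool:
    """Return True only if the file is listed in the manifest and its digest matches."""
    expected = pinned.get(path.name)
    if expected is None:
        return False  # deny by default: unknown artifacts are never trusted
    # Constant-time comparison of the two hex strings.
    return hmac.compare_digest(sha256_file(path), expected)
```

A loader would call `verify_artifact(Path("model.safetensors"), PINNED_SHA256)` and abort on `False`. Note that a matching digest only shows the file is unchanged since it was approved; it says nothing about whether the weights were poisoned before that point, so provenance checks complement rather than replace runtime monitoring.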

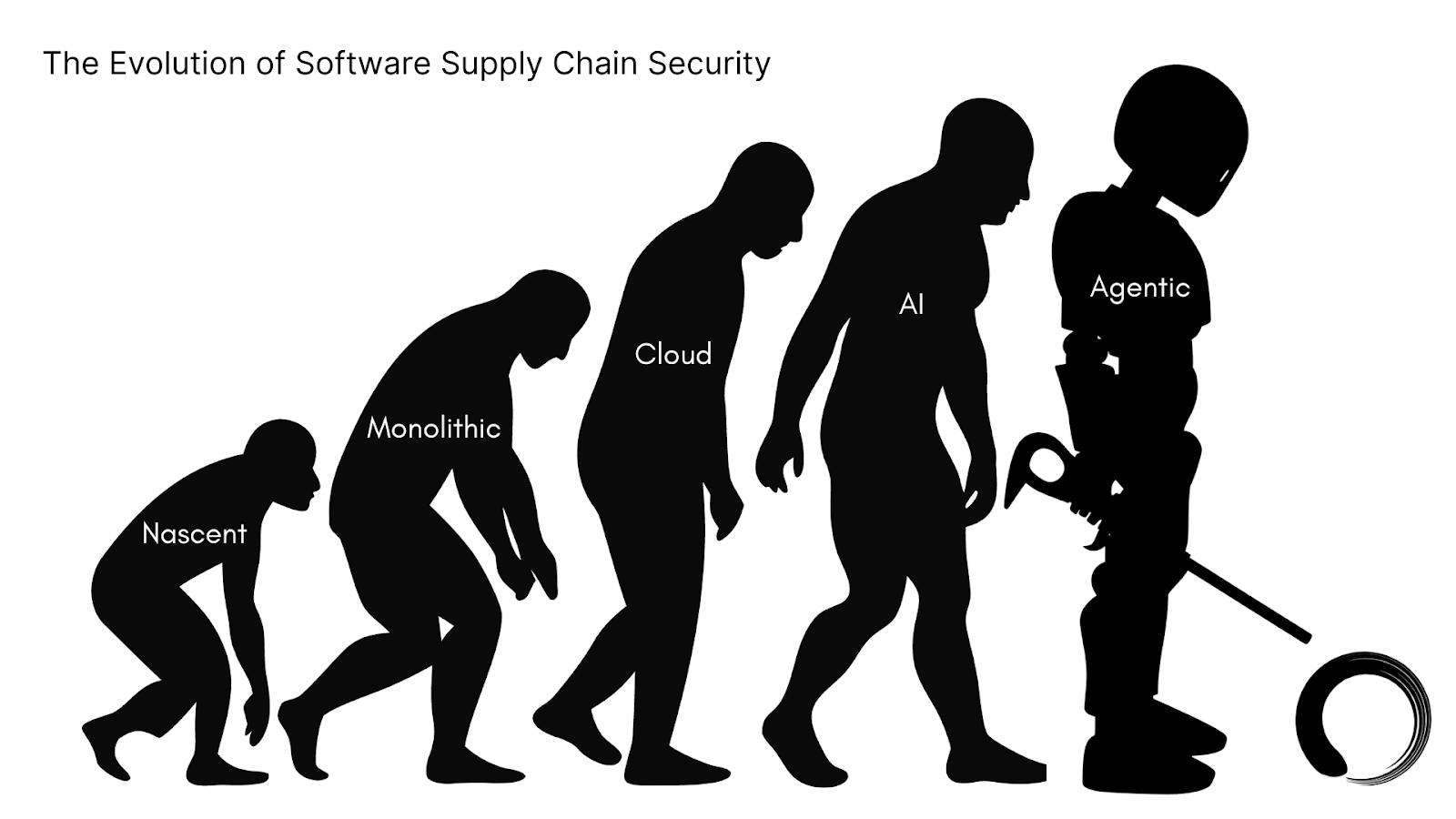

The Modernization Journey: From Monolith to AI Agents

The evolution of software supply chain security reflects the broader transformation of software development itself: a journey from controlled, on-premises environments to today's distributed, AI-powered reality. Today's challenge is the culmination of three decades of change, with each wave bringing new complexity and new vulnerabilities.

Legacy Era – The Static Supply Chain: In the traditional, monolithic era of software development, security was comparatively straightforward. It centered on code scanning and dependency checks, which were effective for monolithic apps but blind to dynamic, runtime behavior.

Cloud Era – Continuous Delivery: Cloud computing shattered this model. Suddenly, code repositories lived on GitHub, builds ran in CI/CD pipelines across AWS and Azure, and open-source dependencies numbered in the hundreds per project. CI/CD and container scanning tools emerged to keep up, yet Gartner now stresses that “container image scanning tools in the development phase help detect known vulnerabilities, but teams must deploy tools to visualize container traffic, identify cluster misconfigurations, and alert on anomalous behavior.” In other words, protection must move beyond build time to runtime.

AI Era – Runtime Agents and LLM Pipelines: The integration of AI into development workflows has introduced entirely new dimensions of risk. Today’s attacks exploit the runtime layer, from prompt injection in AI-native IDEs like Cursor and Windsurf to compromised tools and agents chained together via the Model Context Protocol (MCP). Traditional supply chain security tools, designed for static code analysis, are blind to these runtime threats: they can't see what an agent does when it executes, what data it accesses, or how it responds to malicious prompts. Securing AI means defending live model behavior, data flows, and tool interconnectivity, not just static code.

As the report states, "software engineering teams are ill-equipped to detect and respond to anomalies," yet "malicious attacks on software development pipelines are highly likely to surface as anomalous activities." You can't scan an agent for threats before deployment and assume it's safe; you need continuous runtime monitoring of its behavior, data access patterns, and model interactions.
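The idea that attacks surface as anomalous activities can be made concrete with a deliberately simple example: learn how often an agent calls each tool during a trusted baseline period, then flag calls to tools that were rare or never seen. This Python sketch is a toy frequency baseline built on assumed tool names (`search_docs`, `read_file`, `send_email`) and an assumed 1% rarity threshold. It does not describe how Operant AI detects anomalies; production systems would also weigh arguments, data volumes, and call sequences.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ToolCallBaseline:
    """Toy per-agent baseline of tool-call frequencies."""

    # Hypothetical threshold: tools making up less than 1% of baseline calls are "rare".
    min_share: float = 0.01
    counts: Counter = field(default_factory=Counter)
    total: int = 0

    def learn(self, tool: str) -> None:
        """Record one tool call observed during the trusted baseline window."""
        self.counts[tool] += 1
        self.total += 1

    def is_anomalous(self, tool: str) -> bool:
        """Flag a runtime call whose tool was rare or unseen during the baseline."""
        if self.total == 0:
            return True  # no baseline yet, so nothing counts as normal
        share = self.counts[tool] / self.total  # Counter returns 0 for unseen tools
        return share < self.min_share


def flag_calls(baseline: ToolCallBaseline, observed: list[str]) -> list[str]:
    """Return the observed tool calls that deviate from the baseline, in order."""
    return [tool for tool in observed if baseline.is_anomalous(tool)]


# Usage: build a baseline from normal activity, then check live calls.
baseline = ToolCallBaseline()
for _ in range(60):
    baseline.learn("search_docs")
for _ in range(40):
    baseline.learn("read_file")

print(flag_calls(baseline, ["read_file", "send_email", "search_docs"]))  # ['send_email']
```

The baseline is learned before deployment, but the check runs on every call during execution, which is the "verify continuously" model in miniature.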

This is the fundamental shift: from "trust but verify before deployment" to "verify continuously during execution."

Why Operant AI is Essential for Your LLM Software Supply Chain

Operant AI is proud to be the only vendor featured across Gartner’s *four* critical reports for AI security in 2025: the AI Trust, Risk, and Security Management (AI TRiSM) Market Guide, the API Protection Market Guide, MCP Gateways, and How to Secure Custom-Built AI Agents.

The Software Supply Chain Security Playbook adds critical new context to the importance of evaluating and securing open-source LLMs in real time to mitigate the new class of supply chain threats that dominate in the Age of AI.

Operant AI’s comprehensive runtime protection across every step of the AI supply chain is the only way to operationalize supply chain security in the world of AI and MCP.

Purpose-Built for AI Supply Chain Security

Critical LLM Risk Protection

Gartner identifies unique risks from open-source LLMs, including vulnerable pretraining (model poisoning), model licensing restrictions, and weak model provenance. Operant AI specifically addresses these AI-native threats that traditional security tools completely miss.

Comprehensive AI Security Coverage

Organizations using open-source AI repositories like Hugging Face, TensorFlow Hub, or PyTorch Hub need to evaluate the security risk of third-party AI models, and Operant AI provides dedicated software supply chain security (SSCS) capabilities for analyzing these models.

Why This Matters Now

Exponential Growth in Malicious Packages

More than 700,000 malicious software packages have been identified since 2019, and their number is growing exponentially. Your LLM supply chain is under constant attack.

IDE Compromise Risk

Recent threats include attacks on AI-native IDEs like Cursor and Windsurf through prompt injection, demonstrating that AI development environments face unique, emerging threats requiring specialized protection.

Traditional Tools Fall Short

Software supply chain attacks include everything from injecting malicious code into open-source packages to establishing backdoors in post-deployment software updates. Traditional security simply wasn't designed for AI.

What Operant AI Protects

Based on Gartner's framework, Operant AI secures the entire AI supply chain with three core platforms:

- Operant AI Gatekeeper protects LLMs from prompt injection, jailbreaks, and data leakage with real-time threat detection and automatic PII redaction

- Operant MCP Gateway secures agentic AI by monitoring agent behavior, controlling tool invocations, and preventing attacks like Shadow Escape that manipulate agents to exfiltrate data

- Operant 3D Runtime Defense Platform protects APIs, containers, and Kubernetes workloads from compromise

This comprehensive approach prevents supply chain attacks from compromised LLMs, malicious dependencies, API exploitation, and container vulnerabilities, enabling organizations to deploy AI safely with provable compliance and AI governance.
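"Controlling tool invocations" can be illustrated with a deny-by-default policy check that a gateway might run before forwarding an agent's tool call: the tool must be on an allowlist, and any outbound URL must point at an approved host. This is a minimal Python sketch of that general pattern, not the Model Context Protocol's API or Operant MCP Gateway's interface; `ToolPolicy`, `evaluate`, the tool names, and the convention that outbound tools take a `url` argument are all hypothetical.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical per-agent policy for an MCP-style gateway."""

    allowed_tools: frozenset[str]
    allowed_hosts: frozenset[str]


@dataclass(frozen=True)
class Decision:
    allow: bool
    reason: str


def evaluate(policy: ToolPolicy, tool: str, args: dict[str, str]) -> Decision:
    """Decide whether to forward a tool call; anything not explicitly allowed is denied."""
    if tool not in policy.allowed_tools:
        return Decision(False, f"tool {tool!r} is not on the allowlist")
    url = args.get("url")
    if url is not None:
        # Block exfiltration attempts that point an allowed tool at an unapproved host.
        host = urlparse(url).hostname or ""
        if host not in policy.allowed_hosts:
            return Decision(False, f"destination {host!r} is not approved")
    return Decision(True, "allowed by policy")


# Usage with made-up tool names and hosts.
policy = ToolPolicy(
    allowed_tools=frozenset({"read_file", "http_get"}),
    allowed_hosts=frozenset({"docs.example.com"}),
)
print(evaluate(policy, "http_get", {"url": "https://docs.example.com/guide"}).allow)  # True
print(evaluate(policy, "http_get", {"url": "https://attacker.example.net/x"}).allow)  # False
print(evaluate(policy, "send_email", {"to": "someone@example.com"}).allow)  # False
```

Denying by default means a newly connected or compromised tool gets no access until someone explicitly approves it; the trade-off is that every legitimate new tool requires a policy update.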

The Business Case

Prevent Catastrophic Breaches

Attacks such as the npm (2025), Discord (2024), Okta (2023), and Codecov (2021) incidents demonstrate how supply chain compromises lead to stolen credentials, unauthorized access to source code, and widespread exposure of customer data.

Enable Safe AI Innovation

With Operant AI securing your LLM supply chain, your teams can:

- Adopt open-source AI models with confidence

- Accelerate AI development without security delays

- Meet compliance requirements for AI deployments

- Reduce risk while maintaining innovation velocity

Bottom Line

Software teams must assume that tooling, including all code (both externally sourced and internally developed) and development environments, is already compromised.

With open-source LLMs introducing entirely new attack vectors, you need security purpose-built for AI. Operant AI is that solution.

Don't wait for an AI supply chain breach to expose your organization. Protect your LLM deployments with the security solution recognized by Gartner as a representative vendor in this critical space.

Ready to secure your AI supply chain? Contact Operant AI today.
